Linear regression models

Tropical Biology 2022

Organization for

Tropical Studies

Organization for Tropical Studies

Marcelo

Araya-Salas, PhD

“2022-06-17”

Linear models as a unifying framework

Traditionally, statistical models have been taught as disconnected tools with no clear relationship between them. Take a look at a classic biology stats book (Sokal & Rolhf):

However, most of those common statistical models are just special cases of linear models. Hence, learning them as such can largely simplify things. This approach has several advantages:

First of all, it all comes down to y=a⋅x+b, which largely simplify learning

This also means that there is no need to learn about parameters, assumptions, and result interpretation for every single special case

Linear models have been extended to account for complex distributions and data structures (e.g. mixed models, generalized linear models, zero-inflated models, etc) providing a more flexible platform.

Linear models are applied across statistical paradigms (e.g. frequentist, bayesian)

Purpose

Understand statistical inference through a single modelling tool (broad sense linear models)

Get familiar with building linear models

Extent linear models to different data structures

Workshop overview

A few tips

Feel free to ask questions at any time

Try to run the code yourself (if you don’t have much experience in R try to sit with someone that does)

The line of the code blocks are numbered so we can refer to specific part of the code, and the code can be copied with the button on the upper right corner of the box:

Please load the following packages:

How to simulate data

Generating random numbers in R

Statistics allow us to infer patterns in the data. We tend to use real data sets to teach stats. However, it might get circular to understand the inner working of an statistical tool by testing its ability to infer a pattern that we are not sure its found in the data (and have no idea on the mechanism producing that pattern). Simulations allow us to create controlled scenarios in which we know for sure the patterns present in the data and the underlying processes that generated them.

R offers some basic functions for data simulation. The most used ones

are the random number generating functions. The names of these functions

all start with r (r____()). For instance

runif():

The output is a numeric vector of length 1 thousand

(n = 100):

## [1] 9.88909298 3.97745453 1.15697779 0.69748679 2.43749391 7.92010426

## [7] 3.40062353 9.72062501 1.65855485 4.59103666 1.71748077 2.31477102

## [13] 7.72811946 0.96301542 4.53447770 0.84700713 5.60665867 0.08704600

## [19] 9.85737091 3.16584804 6.39448942 2.95223235 9.96703694 9.06021320

## [25] 9.88739144 0.65645739 6.27038758 4.90475041 9.71024413 3.62220848

## [31] 6.79993461 2.63719930 1.85714261 1.85143222 3.79296747 8.47024392

## [37] 4.98076133 7.90585574 8.38463872 4.56903865 7.99475815 3.81943061

## [43] 7.59701231 4.36775602 9.04217721 3.19534914 0.82569093 8.16289079

## [49] 8.98476221 9.66496398 5.73068883 7.20079497 7.74058624 6.27760801

## [55] 7.22989341 3.86831279 1.62790778 1.87228283 3.91249474 2.73901210

## [61] 1.91917748 5.04391806 7.63840357 6.93668871 5.44054188 6.59087226

## [67] 4.68728380 4.81805539 3.37063598 4.24526302 2.87015131 6.01191532

## [73] 8.40742326 6.20837048 1.34551619 5.67722430 4.43426331 4.37975423

## [79] 6.23617233 9.32653342 8.88492583 8.78540561 2.42176948 7.41453797

## [85] 3.87656313 0.78951739 0.94835550 7.62142731 3.47894026 4.16766709

## [91] 3.44016231 0.08410923 9.11574991 1.82205419 7.22803449 5.71963331

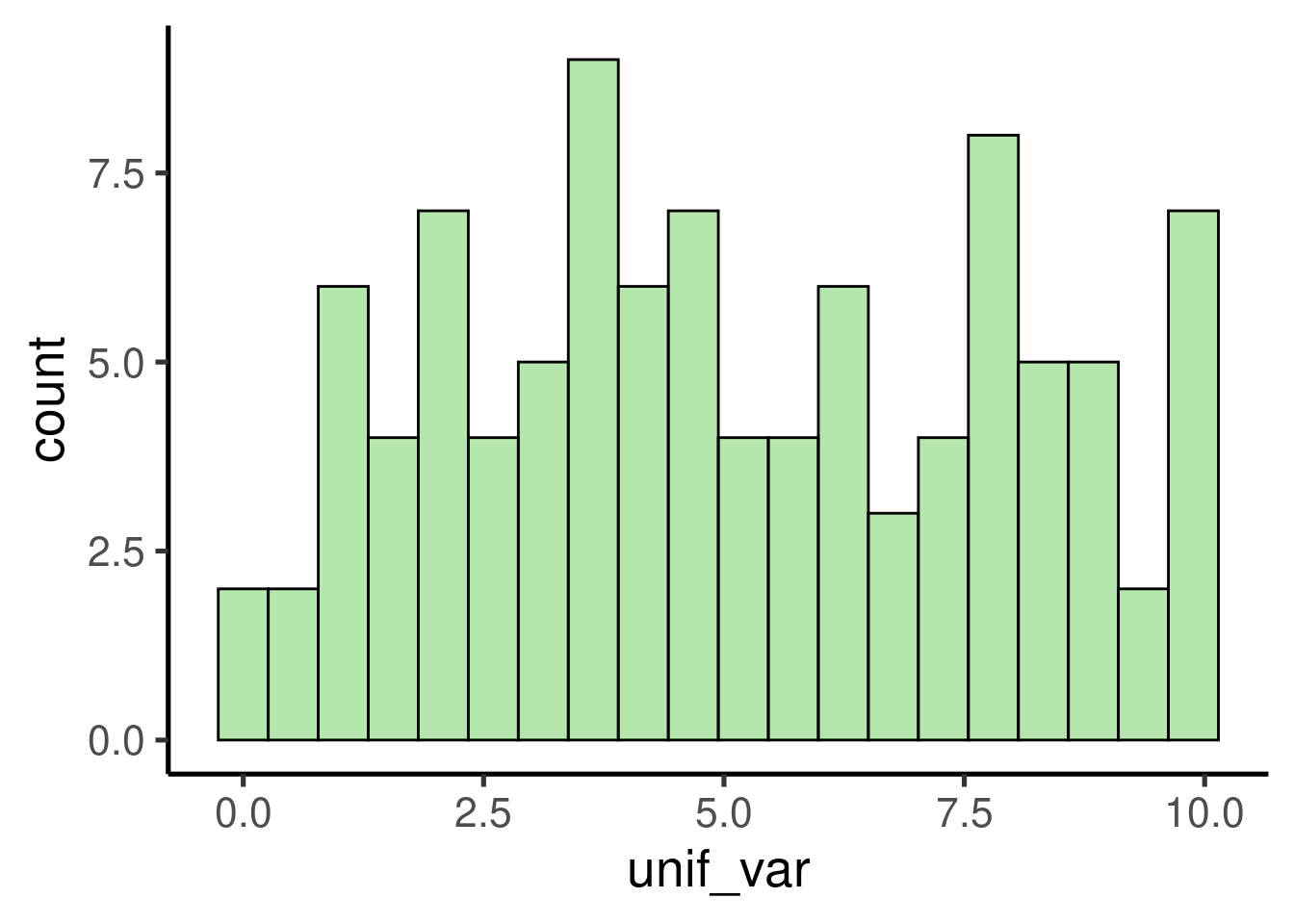

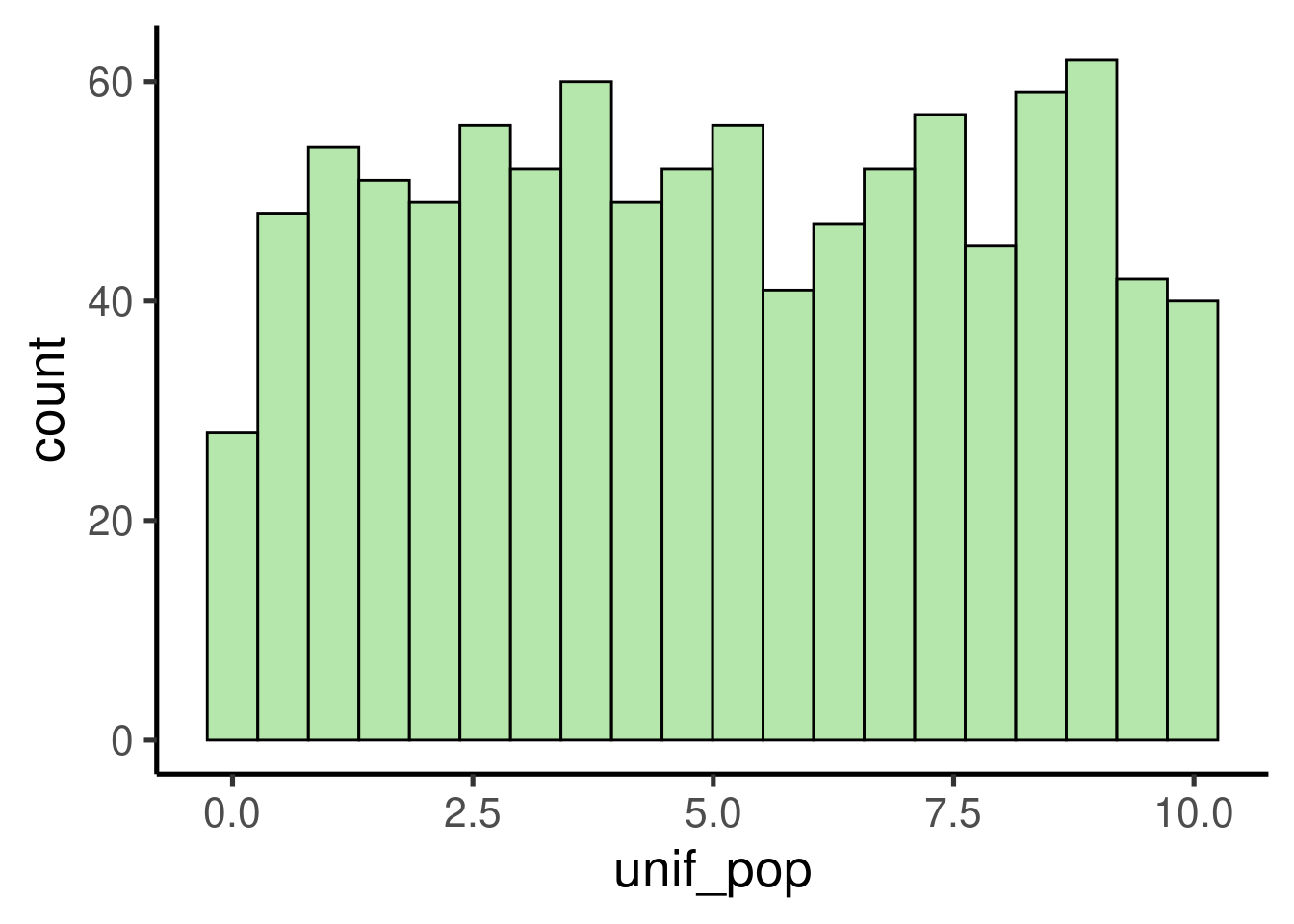

## [97] 5.40036414 3.54947415 8.24091838 1.86136761We can explore the output by plotting a histogram:

# create histogram

ggplot(data = data.frame(unif_var), mapping = aes(x = unif_var)) +

geom_histogram()

It shows a uniform distribution ranging from 0 to 10.

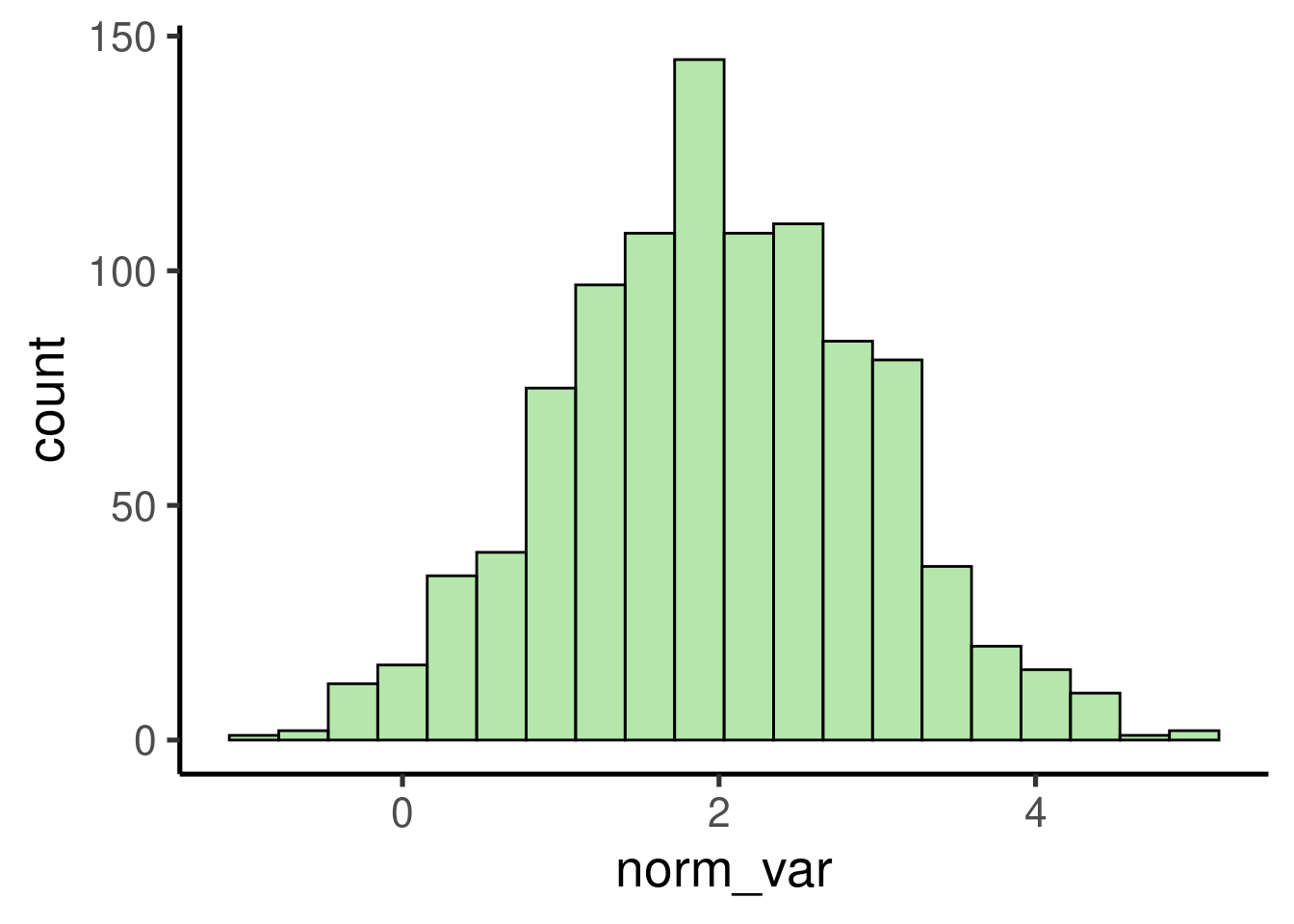

We can also simulate random numbers coming from a normal distribution

using rnorm():

# create random variable

norm_var <- rnorm(n = 1000, mean = 2, sd = 1)

# plot histogram

ggplot(data = data.frame(norm_var), mapping = aes(x = norm_var)) +

geom_histogram()

Note that random number generating functions all have the argument ‘n’, which sets the length of the output vector (i.e. number of random numbers), plus some additional arguments related to specific parameters of the distribution.

Continuous variables (i.e. numeric vectors) can be converted to discrete variables (i.e. integer numbers) simply by rounding them:

## [1] 15.426886 8.544965 15.138700 8.920290 9.369333## [1] 15 9 15 9 9

Exercise

What do the functions

rbinom()andrexp()do? (tip: run?rexp)Run them and make histograms of their output

What do the arguments ‘mean’ and ‘sd’ in

rnorm()do? Play with different values and check the histogram to get a sense of their effect in the simulation

Generating categorical variables

The easiest way to generate categorical variables is to use the

‘letters’ (or ‘LETTERS’) example vector to assign category levels. We

can do this using the function rep(). For instance, the

following code creates a categorical (character) vector with two levels,

each one with 4 observations:

## [1] "a" "a" "a" "a" "b" "b" "b" "b"

We can also replicate this pattern using the argument ‘times’. This code replicates the previous vector 2 times:

## [1] "a" "a" "a" "a" "b" "b" "b" "b" "a" "a" "a" "a" "b" "b" "b" "b"

Another option is to simulate a variable from a binomial distribution and then convert it into a factor:

## [1] 1 0 0 1 1 0 0 0 0 0 0 0 1 1 1 0 0 1 0 1 0 0 0 0 0 0 0 1 0 1 1 1 0 0 0 1 0 0

## [39] 1 1 0 0 0 0 0 1 0 1 1 1## [1] b a a b b a a a a a a a b b b a a b a b a a a a a a a b a b b b a a a b a a

## [39] b b a a a a a b a b b b

## Levels: a b

Random sampling

The other important R tool for playing with simulated data is

sample(). This function allows you to take samples of

specific sizes from vectors. For instance, take the example vector

letters:

## [1] "a" "b" "c" "d" "e" "f" "g" "h" "i" "j" "k" "l" "m" "n" "o" "p" "q" "r" "s"

## [20] "t" "u" "v" "w" "x" "y" "z"

We can take a sample of this vector like is:

## [1] "i" "r" "q" "a" "c" "m" "y" "z" "u" "v"

The argument ‘size’ allow us to determine the size of the sample. Note that we get an error if the size is larger than the vector itself:

## Error in sample.int(length(x), size, replace, prob): cannot take a sample larger than the population when 'replace = FALSE'

This can only be done when sampling with replacement. Sampling with

replacement can be applied by setting the argument

replace = TRUE:

## [1] "j" "i" "h" "i" "k" "u" "n" "i" "w" "k" "c" "t" "m" "p" "i" "h" "d" "w" "c"

## [20] "q" "j" "e" "e" "j" "k" "c" "l" "t" "r" "h"

Iterating a process

Often simulations most be repeated several times to rule out spurious

results due to chance or just to try different parameters. The functions

for simulating data mentioned above can be run several times

(e.g. iterated) using the function replicate():

## [1] "list"## [[1]]

## [1] 1.1918356 -0.3399238

##

## [[2]]

## [1] 0.7891082 -0.6321324

##

## [[3]]

## [1] -1.4931226 -0.1344123

Making simulations reproducible

The last trick we need to run simulations in R is the ability to

reproduce a simulation (i.e. get the exact same simulated data and

results). This can be useful for allowing other researchers to run our

analyses in the exact same way. This can be easily done with the

function set.seed(). Try running the following code. You

should get the same output:

## [1] 0.5074782 0.3067685Creating data sets

Datasets with numeric and categorical data

Now that we know how to simulate continuous and categorical variable.

We can put them together to create simulated data sets. This can be done

using the function data.frame():

# create categorical variable

group <- rep(letters[1:2], each = 3)

# create continous data

size <- rnorm(n = 6, mean = 5, sd = 1)

# put them together in a data frame

df <- data.frame(group, size)

# print

df| group | size |

|---|---|

| a | 4.8157 |

| a | 3.6287 |

| a | 4.4008 |

| b | 5.2945 |

| b | 5.3898 |

| b | 3.7919 |

Of course, we could add more variables to this data frame:

# create categorical variable

group <- rep(letters[1:2], each = 3)

individual <- rep(LETTERS[1:6])

# create continous data

size <- rnorm(n = 6, mean = 5, sd = 1)

weight <- rnorm(n = 6, mean = 100, sd = 10)

# put them together in a data frame

df <- data.frame(group, individual, size, weight)

# print

df| group | individual | size | weight |

|---|---|---|---|

| a | A | 4.6363 | 109.8744 |

| a | B | 3.3733 | 107.4139 |

| a | C | 4.7435 | 100.8935 |

| b | D | 6.1018 | 90.4506 |

| b | E | 5.7558 | 98.0485 |

| b | F | 4.7618 | 109.2552 |

And that’s a simulated data set in its most basic form. That looks a lot like the kind of data we use to work with in the biological science.

How to use simulated data to understand the behavior of statistical tools

A proof of concept: the Central Limit Theorem

The Central Limit Theorem states that, if we take repeated random samples of a population, the means of those samples will conform to a normal distribution, even if the population is not normally distributed. In addition, the resulting normal distribution must have a mean close to the population’s mean. The theorem is a key concept for inferential statistics as it implies that statistical methods that work for normal distributions can be applicable to many problems involving other types of distributions. Nonetheless, the point here is only to showcase how simulations can be used to understand the behavior statistical methods.

To check if those basic claims about the Central Limit Theorem hold true we can use simulated data in R. Let’s simulate a 1000 observation population with a uniform distribution:

# simulate uniform population

unif_pop <- runif(1000, min = 0, max = 10)

# check distribution/ plot histogram

ggplot(data = data.frame(unif_pop), mapping = aes(x = unif_pop)) +

geom_histogram()

We can take random samples using sample() like this:

## [1] 9.2841984 1.0262565 2.5751741 3.3248460 6.8998985 2.2940436 0.3373657

## [8] 8.2136568 3.3036362 8.0379320 2.5917392 7.8177006 5.6542576 0.6383126

## [15] 2.8347032 4.2043442 4.7632983 4.4219338 6.9782974 7.9262477 0.6812050

## [22] 3.5232307 6.5110308 5.3828892 7.9721010 1.8006177 4.2128214 3.3386579

## [29] 8.9122284 4.7116278

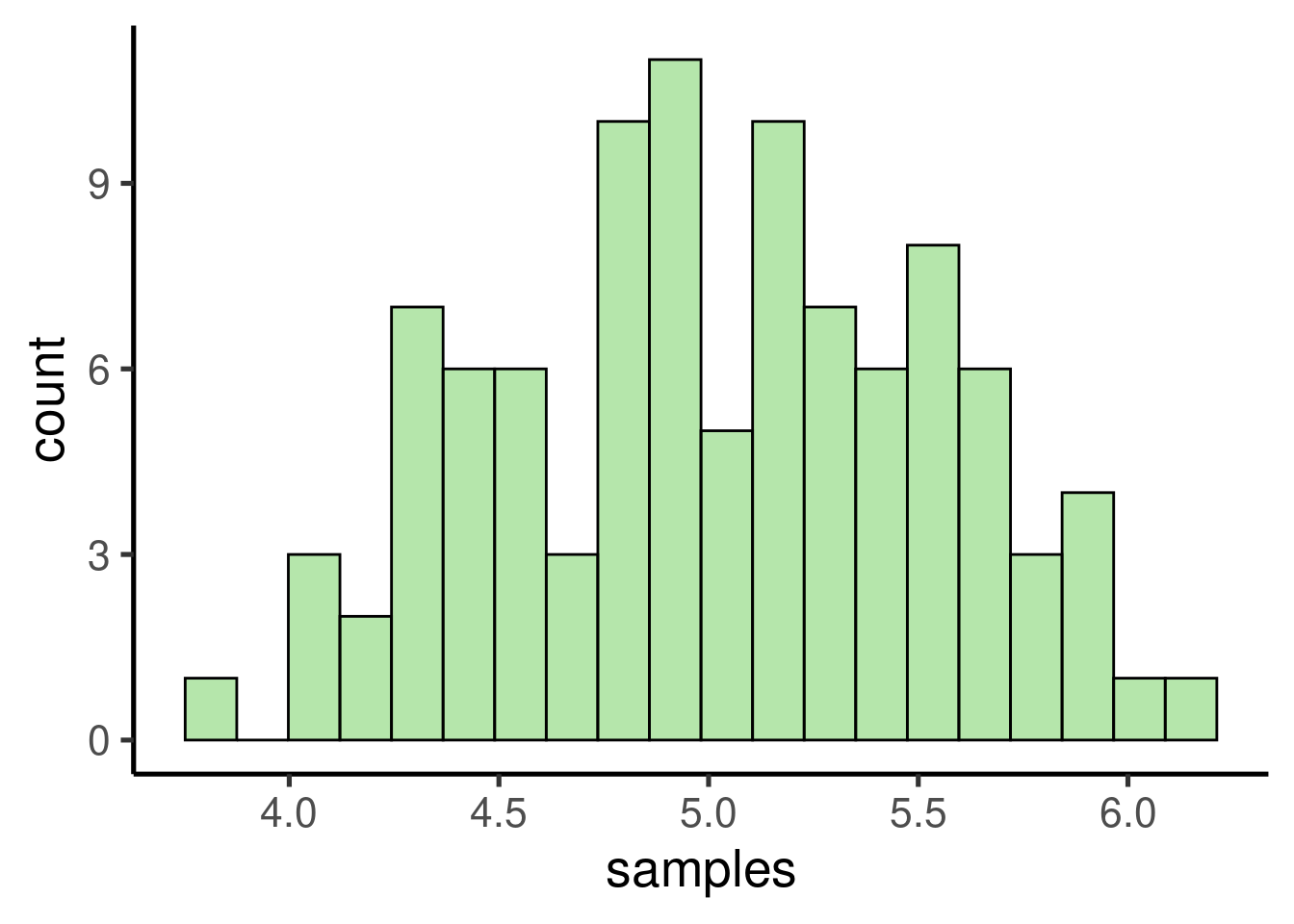

This process can be replicated several times with

replicate():

The code above takes 100 samples with 30 values each. We can now check the distribution of the samples:

# check distribution/ plot histogram

ggplot(data = data.frame(samples), mapping = aes(x = samples)) + geom_histogram()

… as well as the mean:

## [1] 5.021219

As expected, the samples follows a normal distribution with a mean close to the mean of the population, which is:

## [1] 5.052715

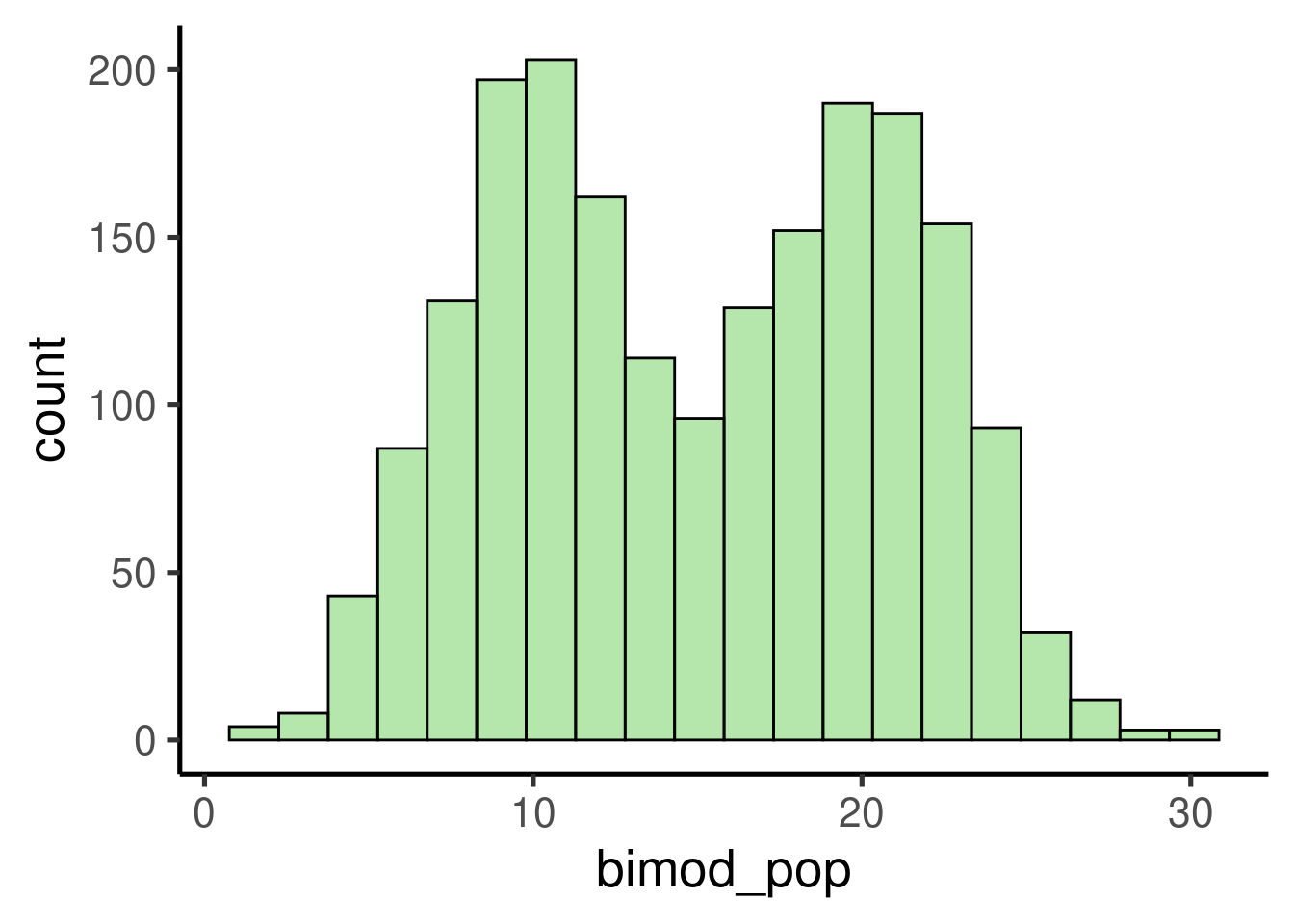

Let’s try with a more complex distribution. For instance, a bimodal distribution:

# set seed

set.seed(123)

# simulate variables

norm1 <- rnorm(n = 1000, mean = 10, sd = 3)

norm2 <- rnorm(n = 1000, mean = 20, sd = 3)

# add them in a single one

bimod_pop <- c(norm1, norm2)

# check distribution/ plot histogram

ggplot(data = data.frame(bimod_pop), mapping = aes(x = bimod_pop)) +

geom_histogram()

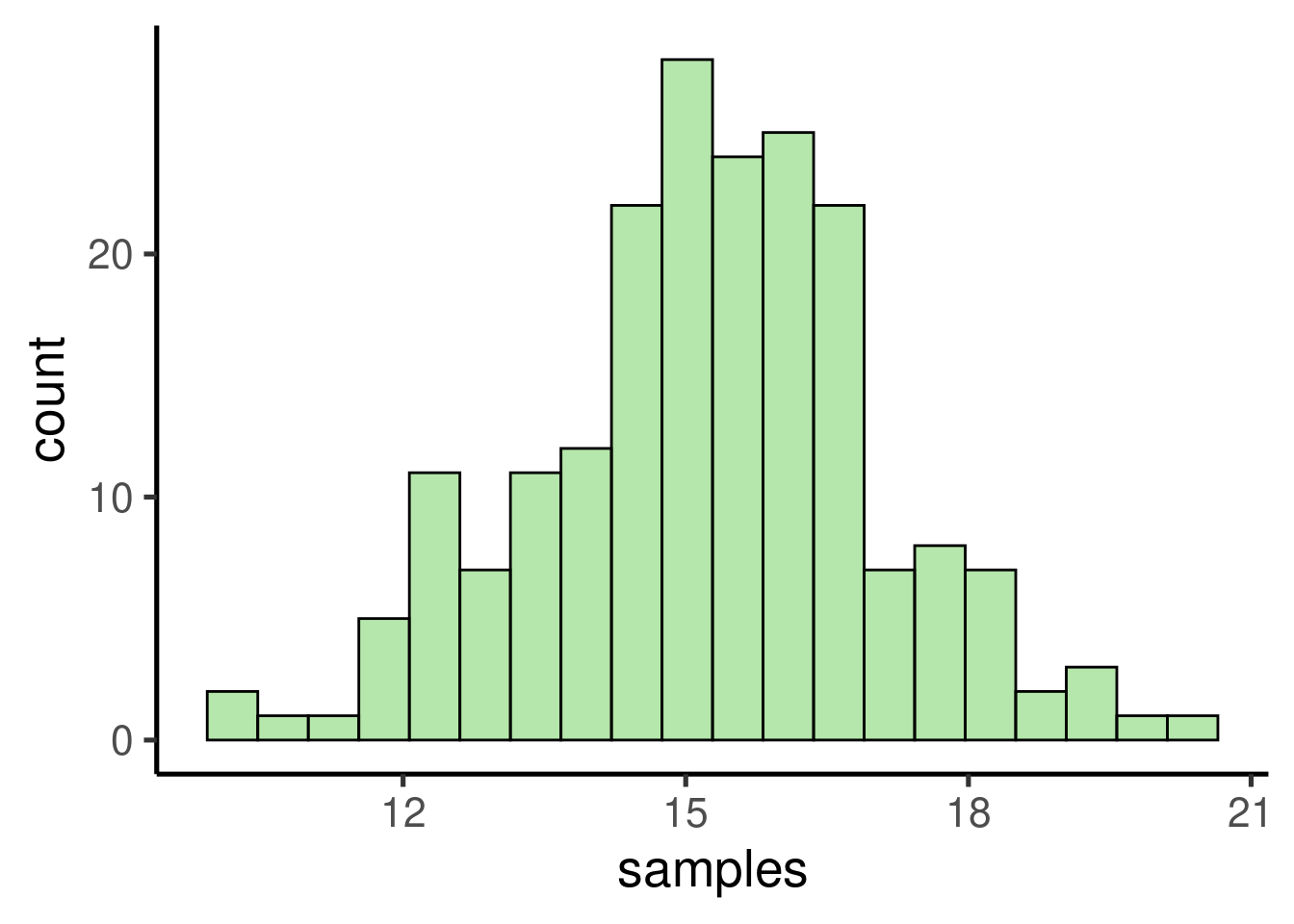

samples <- replicate(200, mean(sample(bimod_pop, 10)))

# check distribution/ plot histogram

ggplot(data = data.frame(samples), mapping = aes(x = samples)) + geom_histogram()

## [1] 15.23058## [1] 15.08789

Exercise

Try exploring the Central Limit Theorem as above but this time using:

- An exponential distribution (

rexp()) - A binomial distribution (

rbinom())

- An exponential distribution (

- For each distribution: plot a histogram and compare the means of the population and the samples

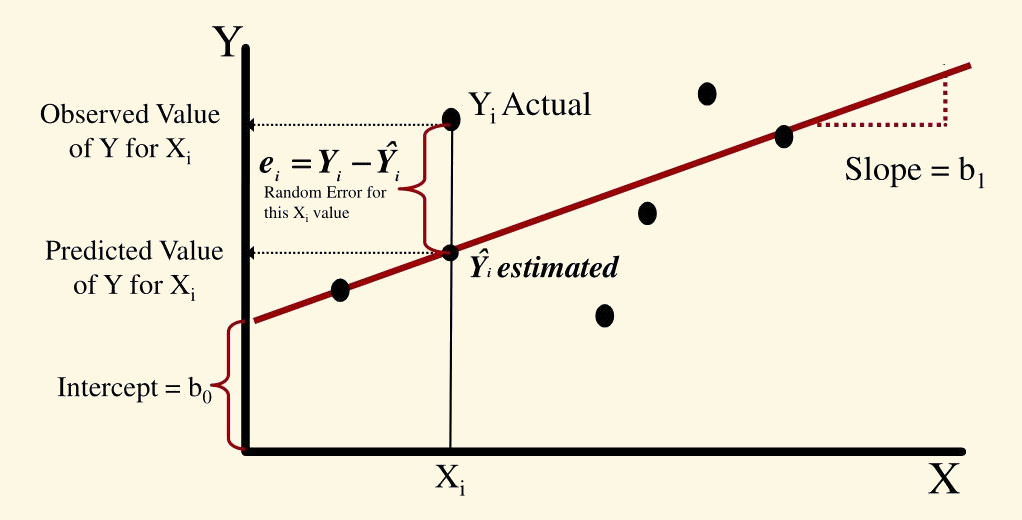

Linear regressions are based on the linear equation a = mx + b we learned in high school. The formal representation looks like this:

\(\hat{Y}\): response variable

\(\beta_{o}\): intercept (y value)

\(\beta_{1}\): estimate of the magnitude of the effect of \(x_{1}\) on \(\hat{Y}\) (a.k.a. effect size, coefficient or simply the ‘estimate’)

\(x_{1}\): predictor variable

The most common goal of a linear regression is estimating the \(\beta_{*}\) values. This is achieved by finding the best fitting straight line representing the association between a predictor and the response:

Those \(\beta_{*}\)s are the estimated effect size of the correspondent predictor (e.g. \(\beta_{1}\) is the effect size \(x_{1}\)). Their value represent the mean change in \(\hat{Y}\) (in \(\hat{Y}\) units) for a unit of change in \(\beta_{*}\). Hence the null hypothesis is that those \(\beta_{*}\)s are not different from 0:

which is equivalent to this:

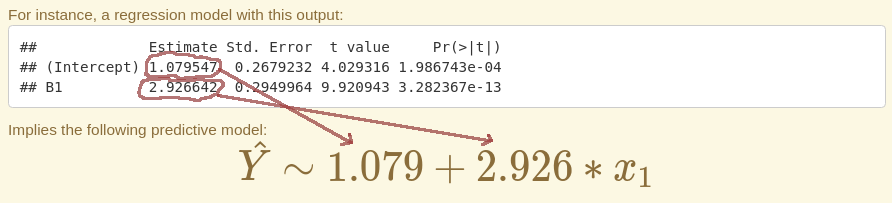

Linear regression in R

To take full advantage of linear models we need to feel comfortable

with them. We will do this by exploring R’s linear regression function

lm(). In R most linear models and their extensions share

common data input and output formats which makes easy to apply them once

we understand their basics.

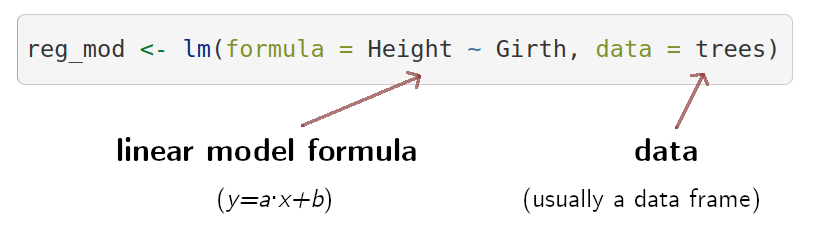

We will use the data set ‘trees’ that comes by default with R. ‘trees’ provides measurements of the diameter (labeled as ‘Girth’), height and volume of 31 felled black cherry trees:

| Girth | Height | Volume |

|---|---|---|

| 8.3 | 70 | 10.3 |

| 8.6 | 65 | 10.3 |

| 8.8 | 63 | 10.2 |

| 10.5 | 72 | 16.4 |

| 10.7 | 81 | 18.8 |

| 10.8 | 83 | 19.7 |

The basic R function to build a linear model is lm().

Let’s look at the basic components of a regression model using

lm():

We can fit this model to look at the output:

##

## Call:

## lm(formula = Height ~ Girth, data = trees)

##

## Residuals:

## Min 1Q Median 3Q Max

## -12.5816 -2.7686 0.3163 2.4728 9.9456

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 62.0313 4.3833 14.152 1.49e-14 ***

## Girth 1.0544 0.3222 3.272 0.00276 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 5.538 on 29 degrees of freedom

## Multiple R-squared: 0.2697, Adjusted R-squared: 0.2445

## F-statistic: 10.71 on 1 and 29 DF, p-value: 0.002758

This is what the elements of the output mean:

Call: the function and parameters were used to create the model

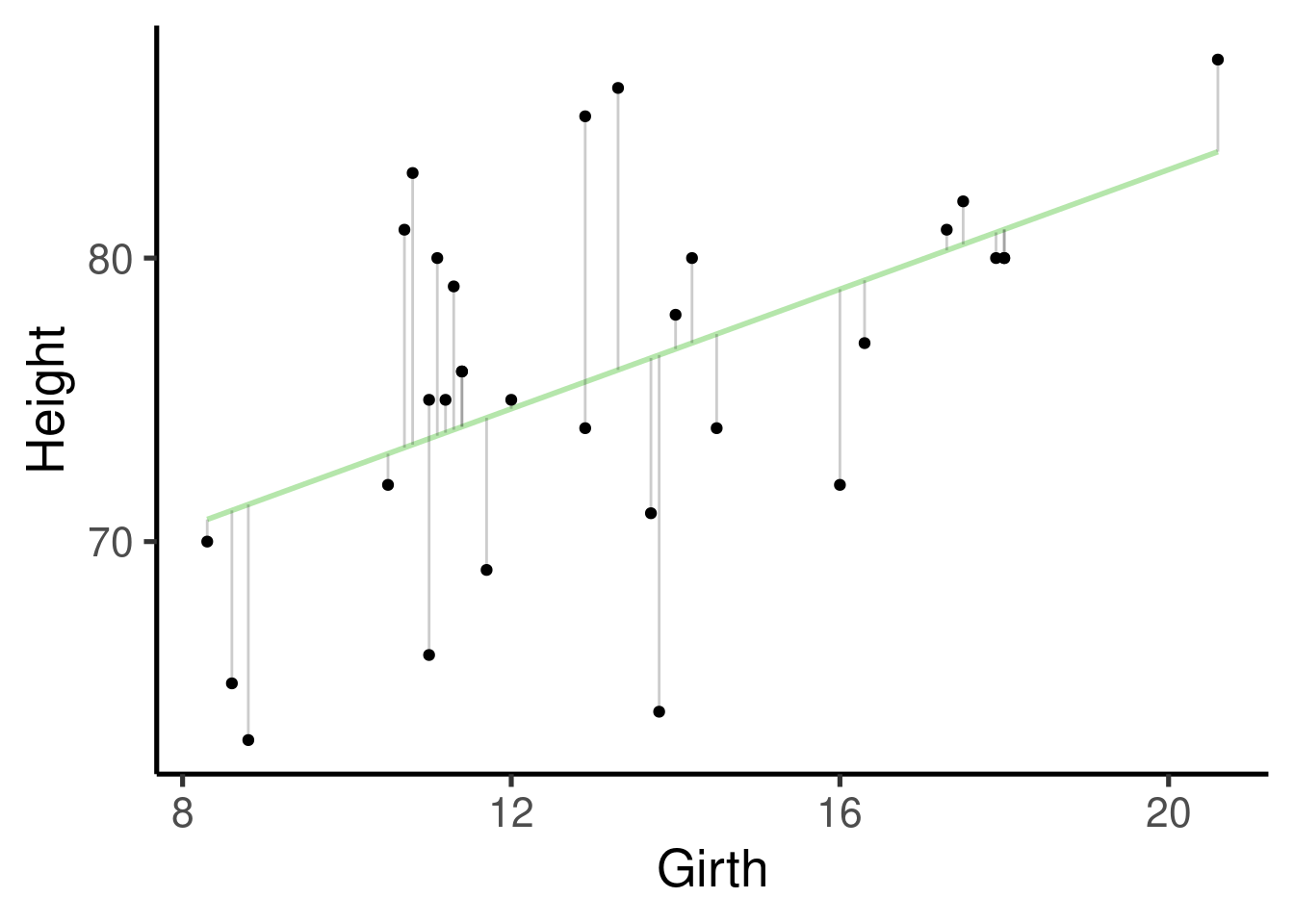

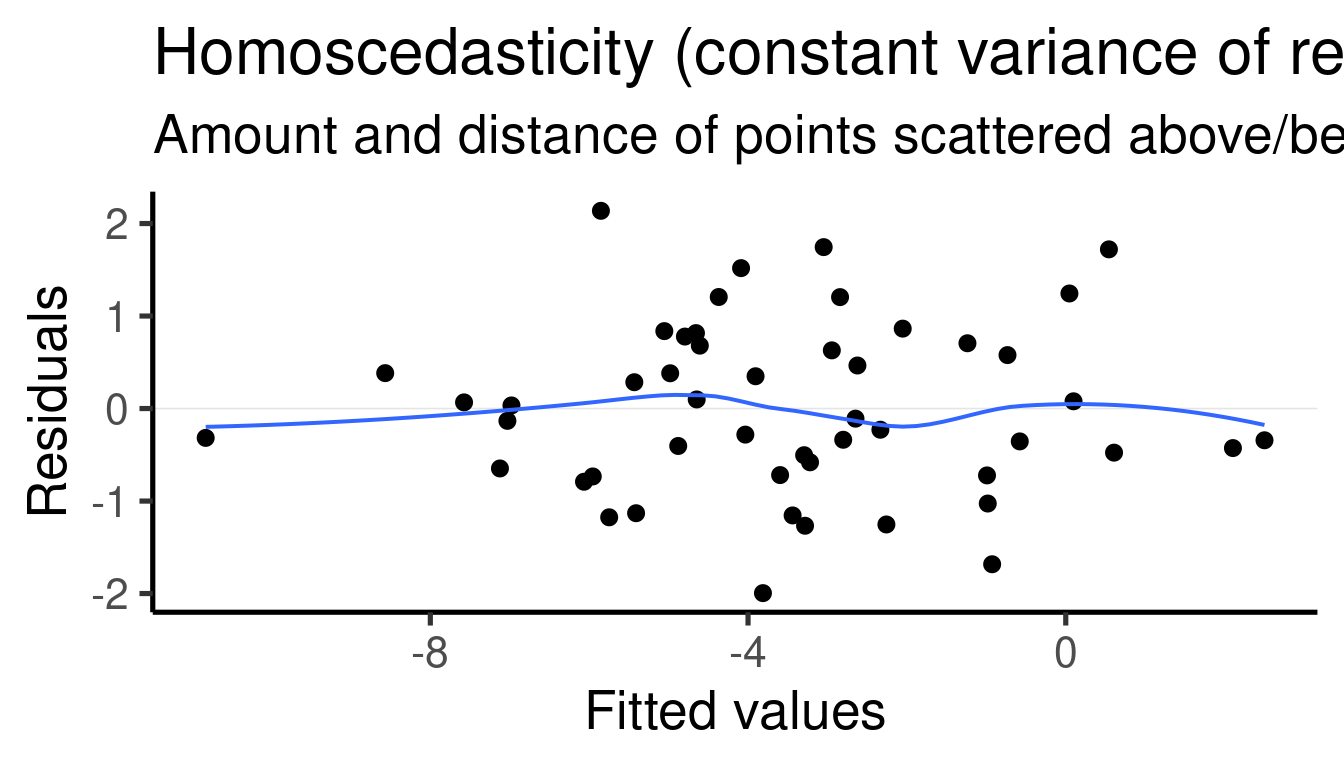

Residuals: distribution of the residuals. Residuals are the difference between what the model predicted and the actual value of y. This is a graphic representation of the residuals:

Coefficients: this one contains the effect sizes (‘Estimates’), a measure of their uncertainty (‘Std. Error’), the associated statistic (‘t value’) and p value (‘Pr(>|t|)’). Estimates are given as the mean change in y for every increase in 1 unit in x. So for this example is 1.0544 \(in / in\). Despite the fact that the units will cancel out (`1.0544 \(in / in\) = 1.0544) keeping them in mind is still biologically meaningful. They mean that, in average, and 1 inch increase in girth you will expect an increase of 1.0544 inches in height.

Residual standard error: self-explanatory. The standard error of the residuals

Multiple R-squared: the coefficient of determination, this is intended as a measurement of how well your model fits to the data

Adjusted R-Squared: similar to the ‘Multiple R-squared’ but penalized for the number of parameters

F-statistic: statistic for a global test that checks if at least one of your coefficients are non-zero

p-value: probability for a global test that checks if at least one of your coefficients are non-zero

We will use lm() to showcase the flexibility of

regression models. Regression components will be added gradually so we

can take time of understand each of them as well as the correspondent

changes in the regression output.

Response (intercept)-only model

Let’s first create a response numeric variable:

# set seed

set.seed(123)

# number of observations

n <- 50

# random variables

y <- rnorm(n = n, mean = 0, sd = 1)

# put it in a data frame

y_data <- data.frame(y)

This single variable can be input in an intercept-only

regression model. To do this we need to supply the model

formula and the data to lm():

Which is equivalent to:

We can get the default summary of the model results by running

summary() on the output object ‘y_mod’:

##

## Call:

## lm(formula = y ~ 1, data = y_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.0010 -0.5937 -0.1070 0.6638 2.1345

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.0344 0.1309 0.263 0.794

##

## Residual standard error: 0.9259 on 49 degrees of freedom

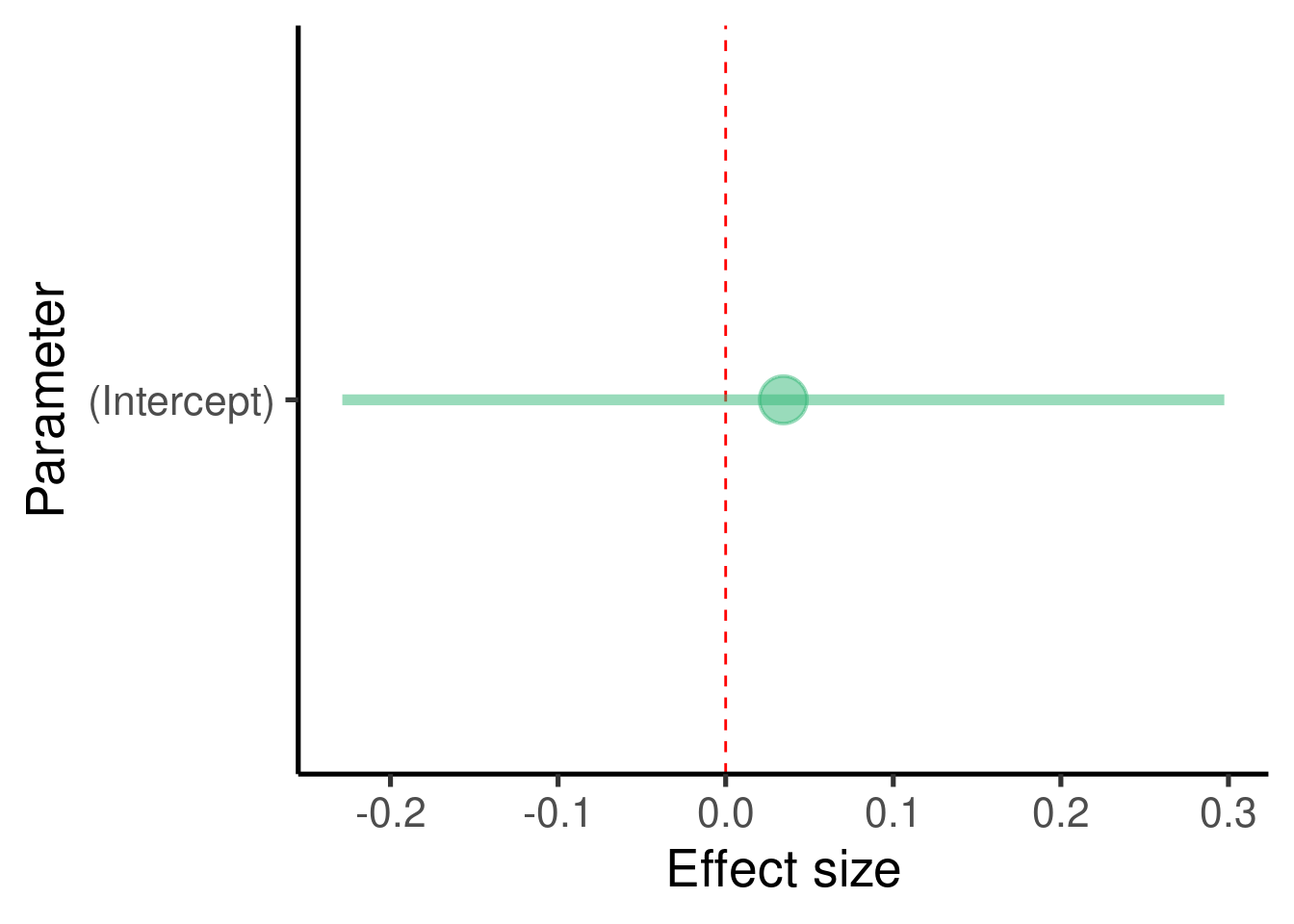

It can be quite informative to plot the effect sizes (although in this case we just have one):

ci_df <- data.frame(param = names(y_mod$coefficients), est = y_mod$coefficients,

confint(y_mod))

ggplot(ci_df, aes(x = param, y = est)) + geom_hline(yintercept = 0,

color = "red", lty = 2) + geom_pointrange(aes(ymin = X2.5.., ymax = X97.5..)) +

labs(x = "Parameter", y = "Effect size") + coord_flip()

For assessing the significance of the association we focus on the coefficients table:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.03440355 0.1309378 0.2627473 0.793847In this example there are no predictors in the model so we only got an estimate for the intercept (\(\beta_0\))

The model tell us that the intercept is estimated at 0.0344035 and that this value is not significantly different from 0 (p-value = 0.793847)

In this case the intercept is simply the mean of the response variable

## [1] 0.03440355

- We seldom have predictions about the intercept so we tend to ignore this estimate.

Exercise

Adding a non-associated predictor

We can create 2 unrelated numeric variables like this:

# set seed

set.seed(123)

# number of observations

n <- 50

# random variables

y <- rnorm(n = n, mean = 0, sd = 1)

x1 <- rnorm(n = n, mean = 0, sd = 1)

# create data frame

xy_data <- data.frame(x1, y)

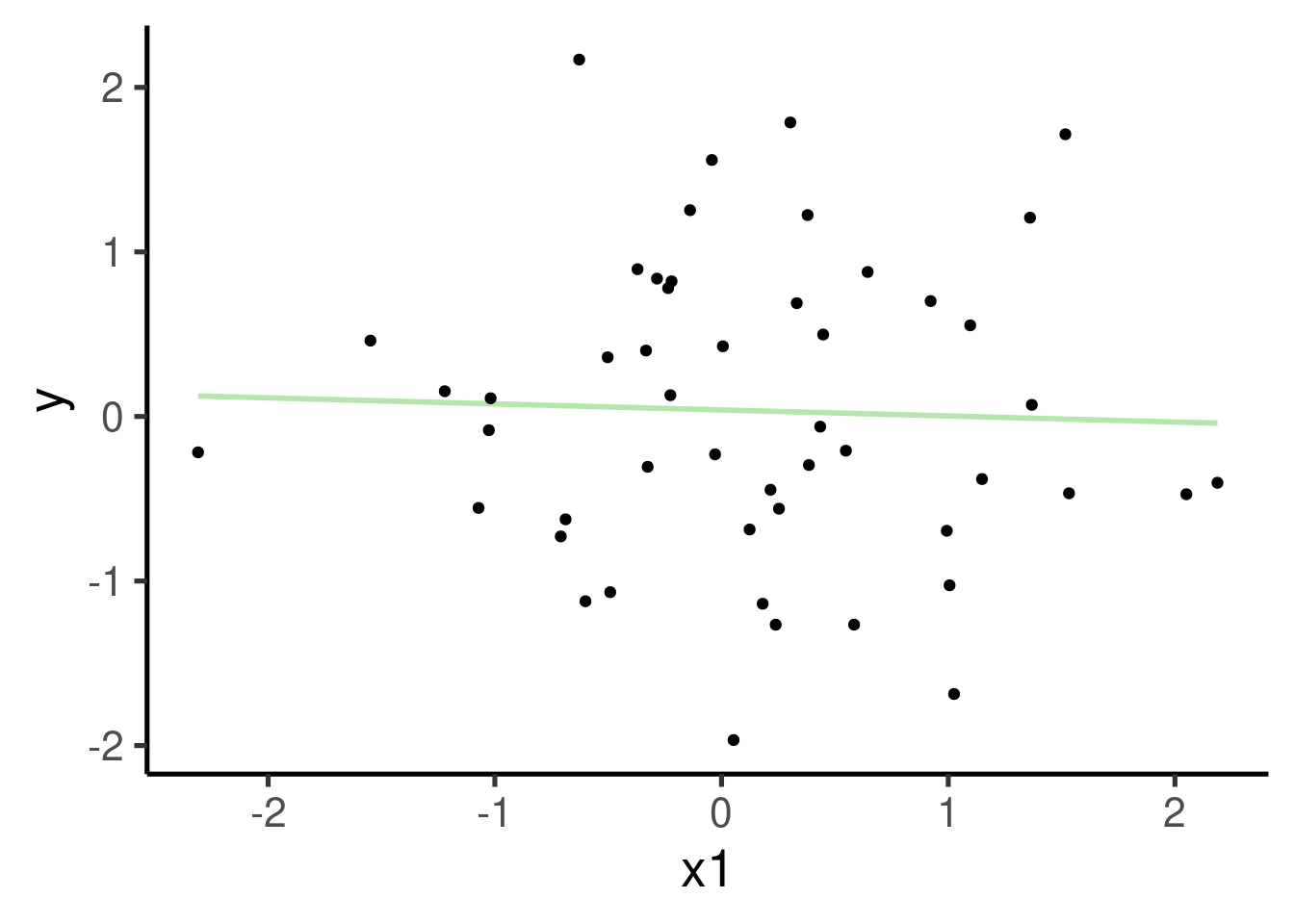

These two variables can be input in a regression model to evaluate the association between them:

# build model

xy_mod <- lm(formula = y ~ x1, data = xy_data)

# plot

ggplot(xy_data, aes(x = x1, y = y)) + geom_smooth(method = "lm", se = FALSE) +

geom_point() # plot points

Which is equivalent to:

Let’s print the summary for this model:

##

## Call:

## lm(formula = y ~ x1, data = xy_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.0044 -0.6239 -0.1233 0.6868 2.1061

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.03977 0.13396 0.297 0.768

## x1 -0.03668 0.14750 -0.249 0.805

##

## Residual standard error: 0.9349 on 48 degrees of freedom

## Multiple R-squared: 0.001287, Adjusted R-squared: -0.01952

## F-statistic: 0.06184 on 1 and 48 DF, p-value: 0.8047

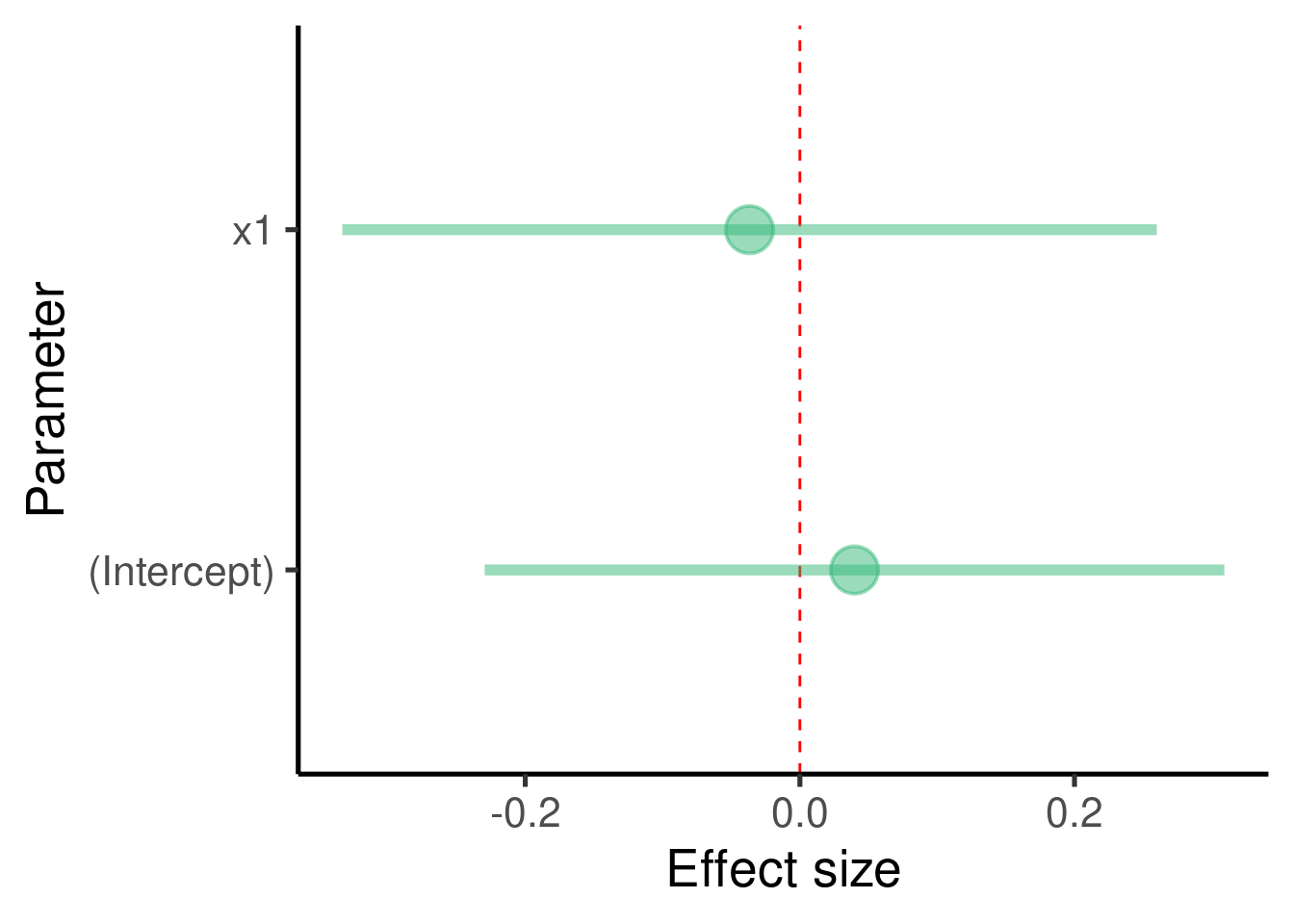

… and plot the effect sizes:

ci_df <- data.frame(param = names(xy_mod$coefficients), est = xy_mod$coefficients,

confint(xy_mod))

ggplot(ci_df, aes(x = param, y = est)) + geom_hline(yintercept = 0,

color = "red", lty = 2) + geom_pointrange(aes(ymin = X2.5.., ymax = X97.5..)) +

labs(x = "Parameter", y = "Effect size") + coord_flip()

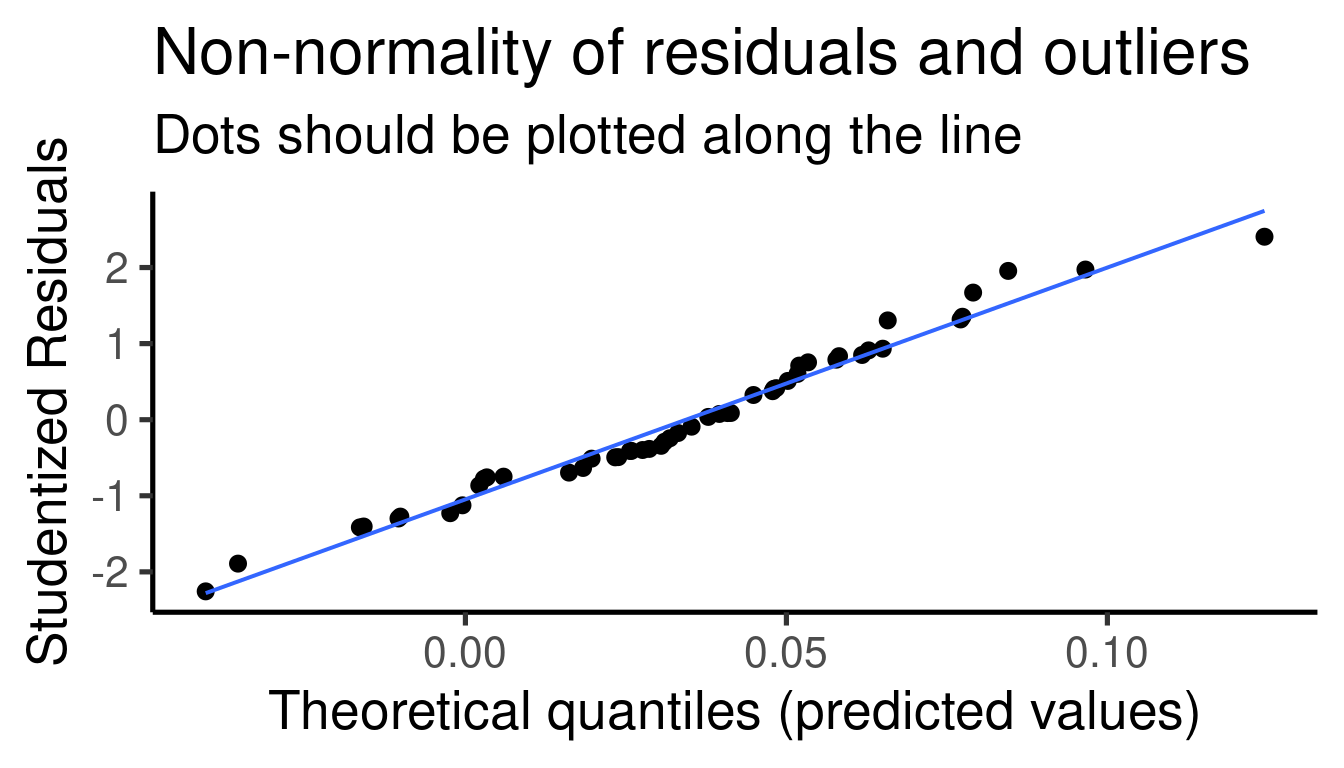

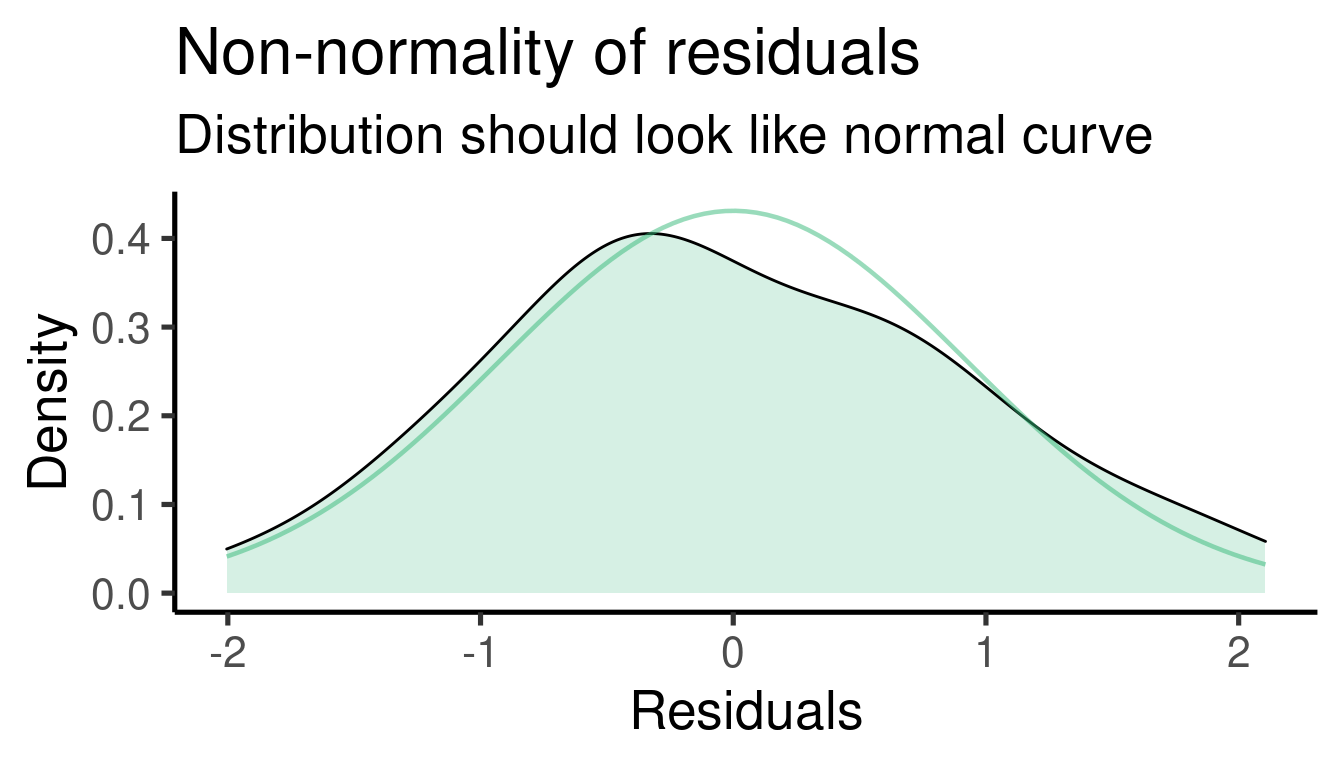

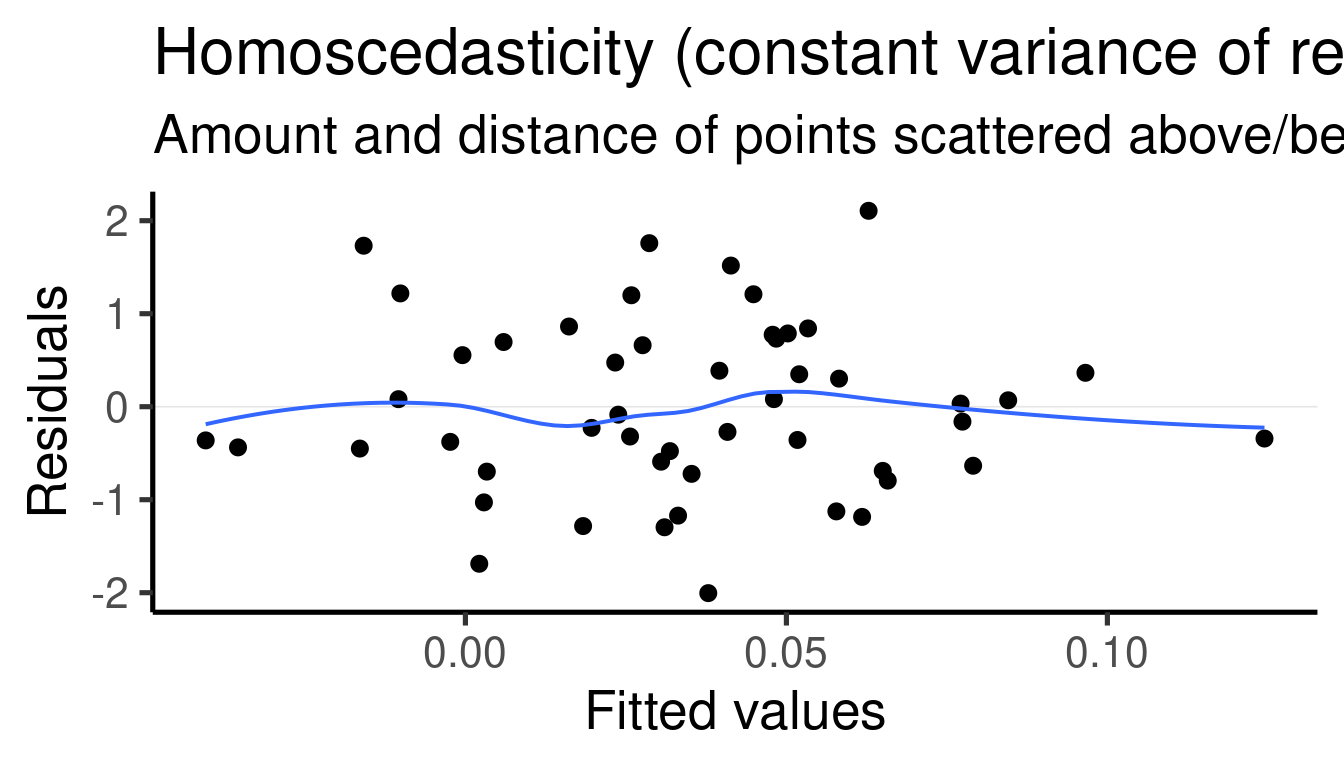

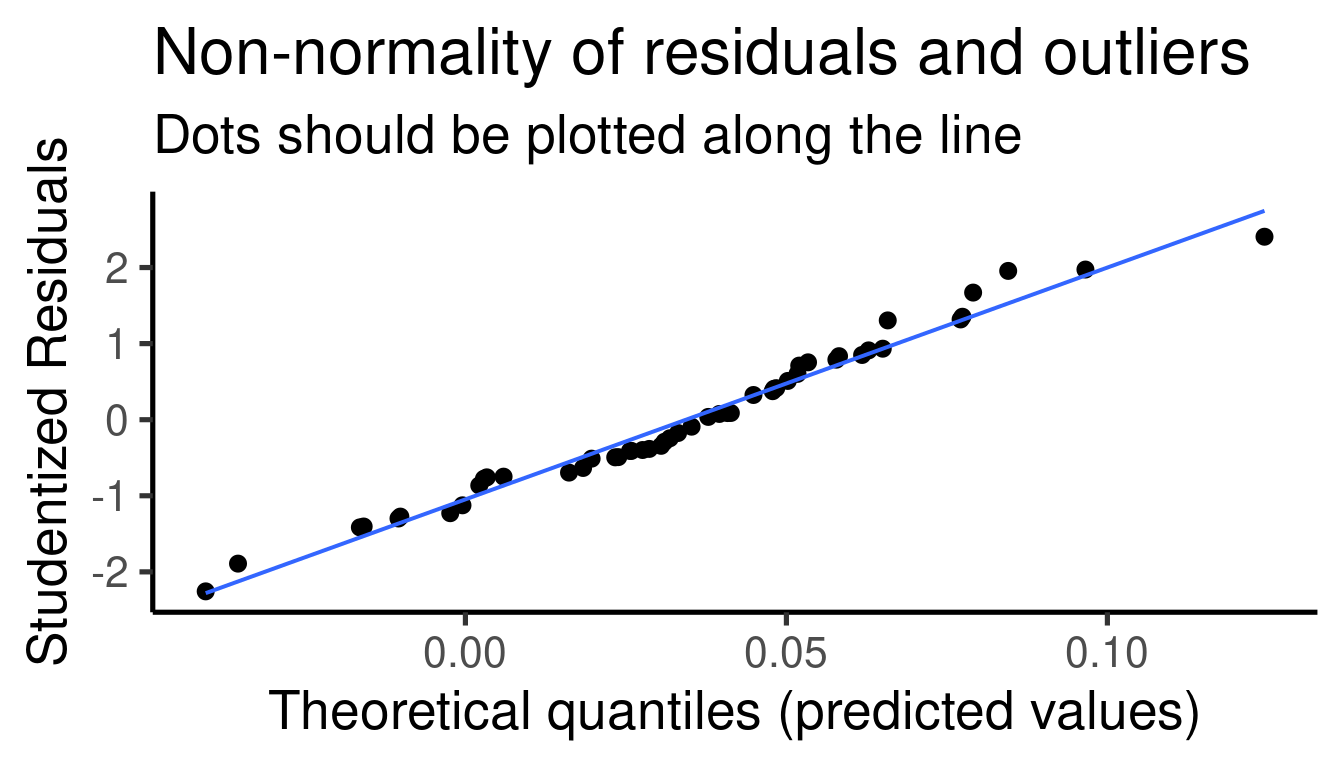

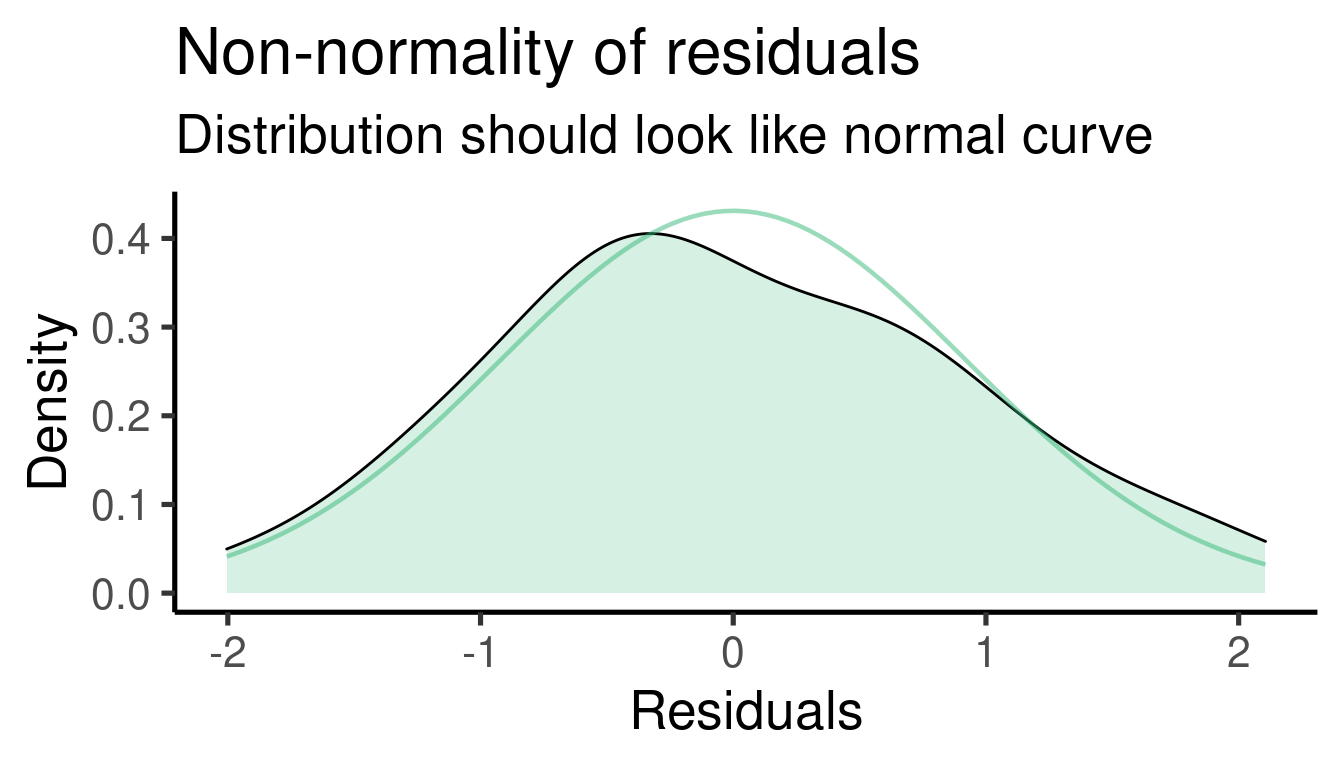

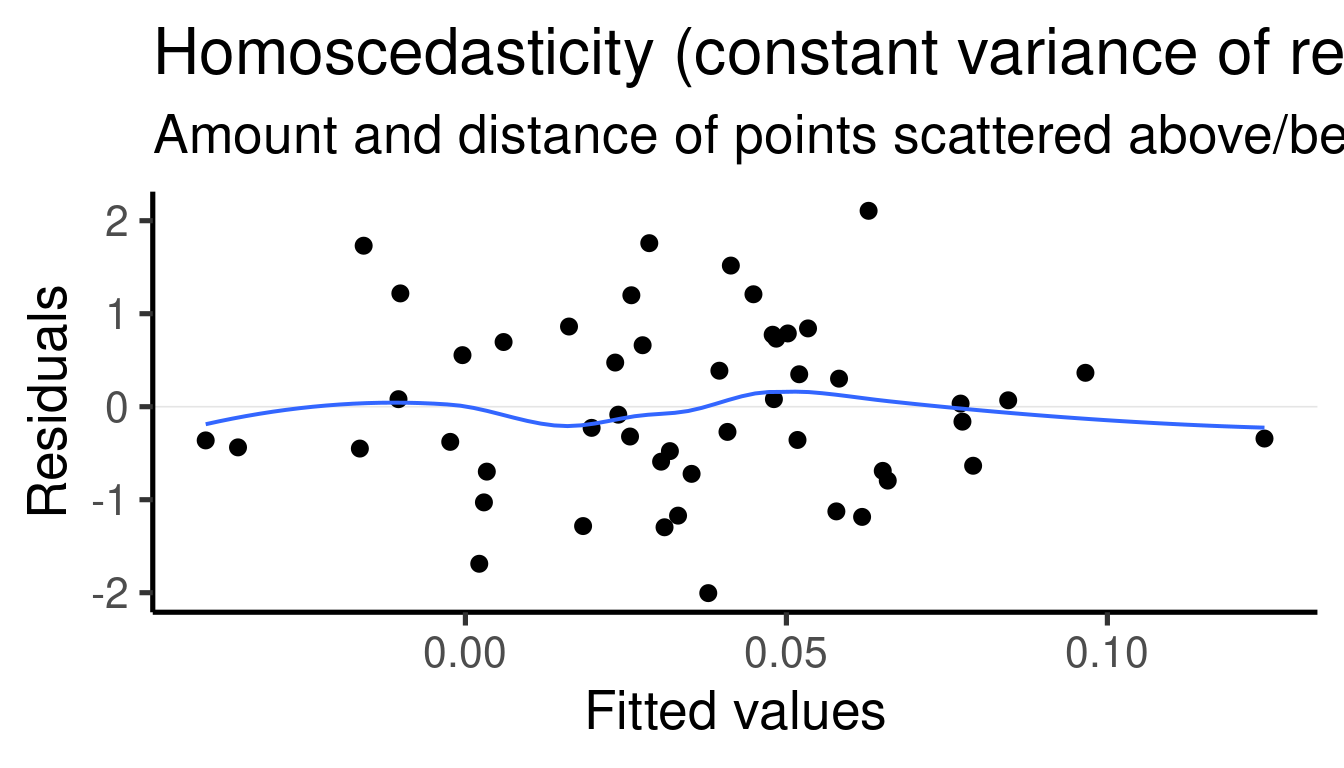

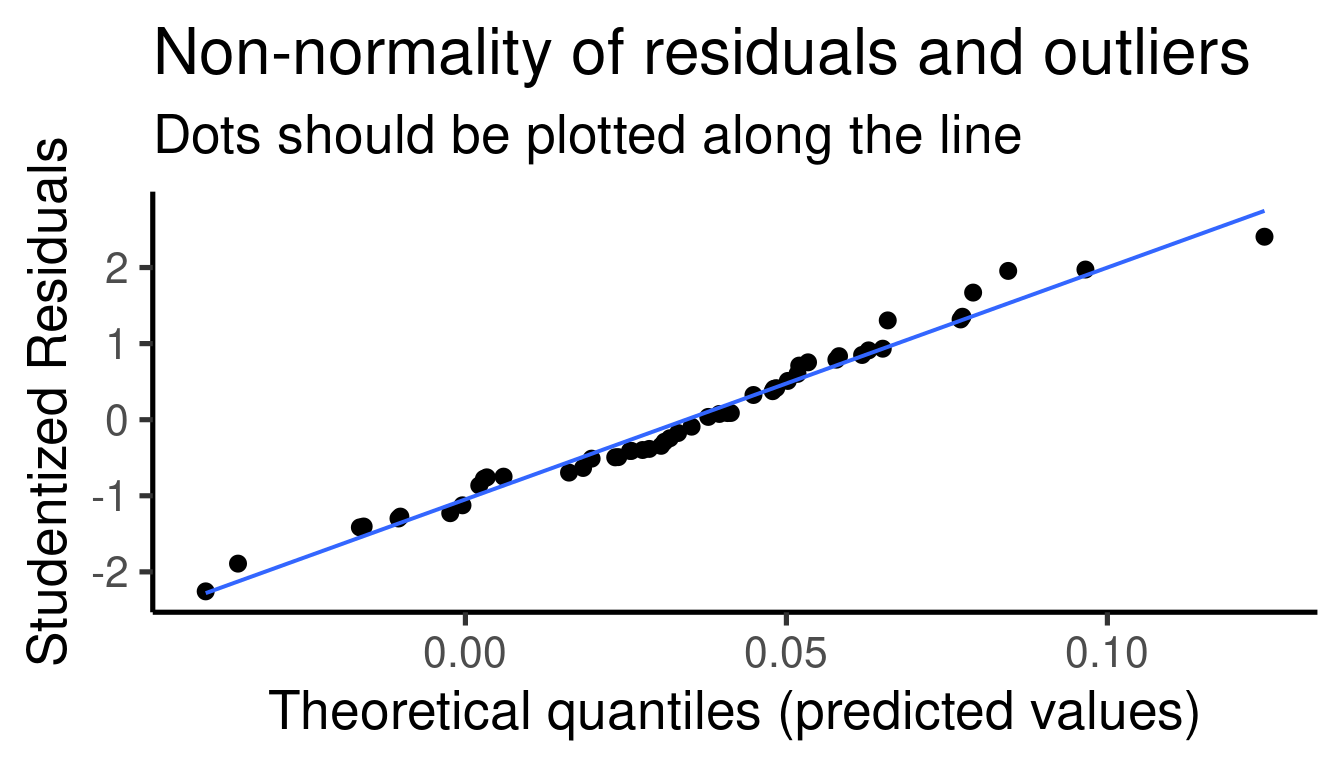

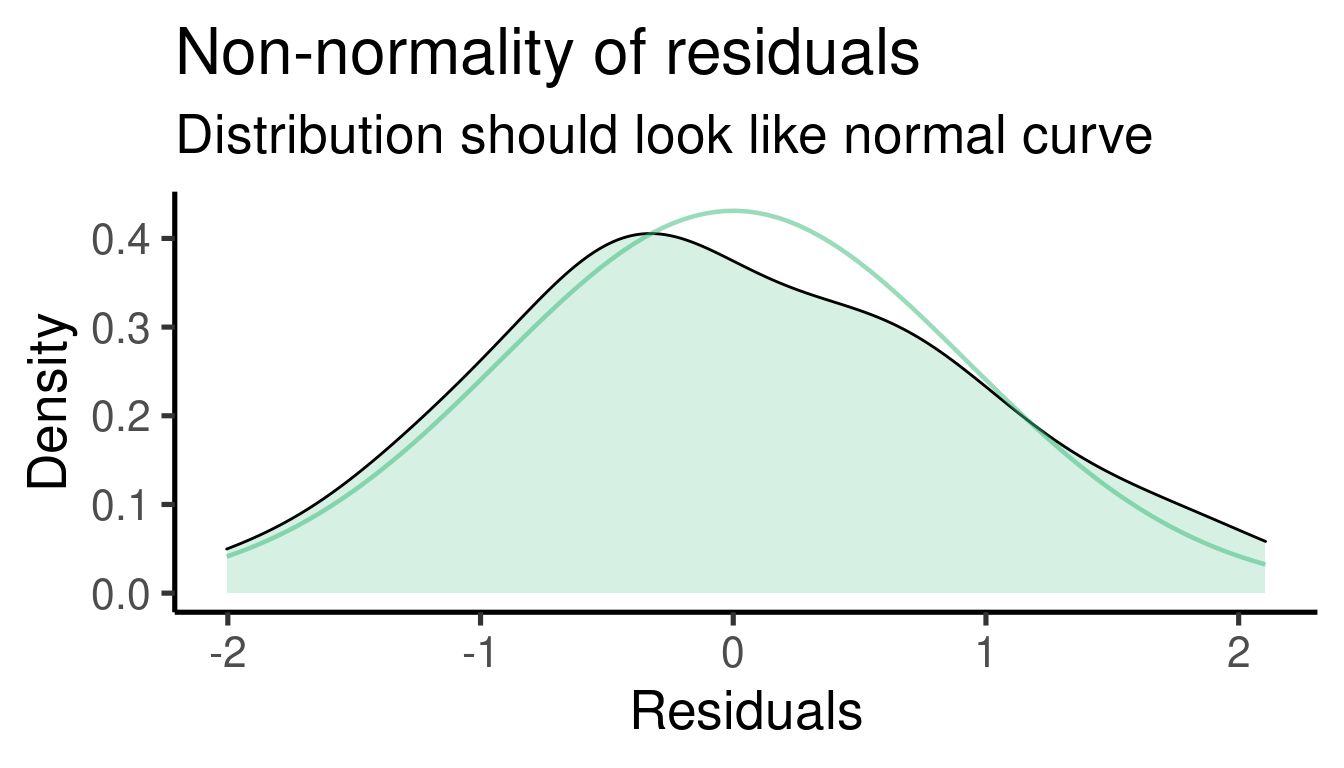

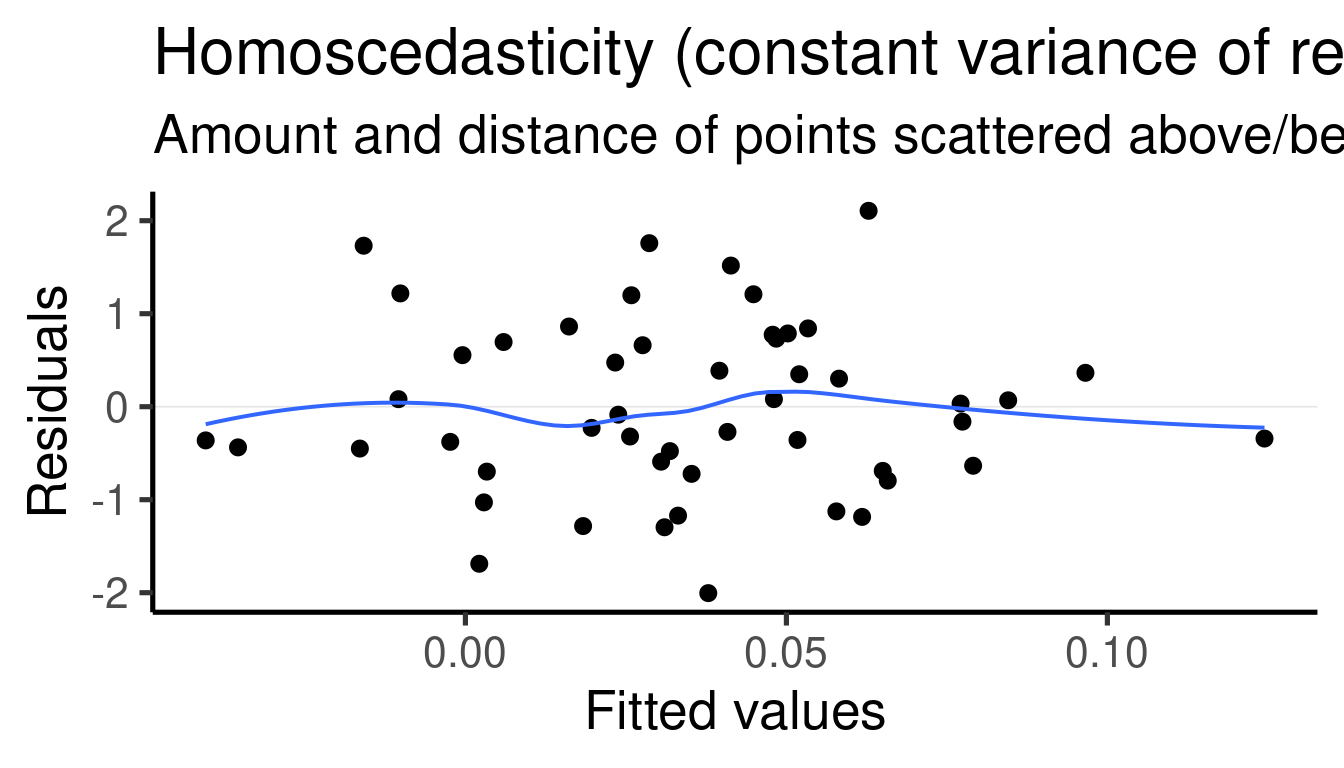

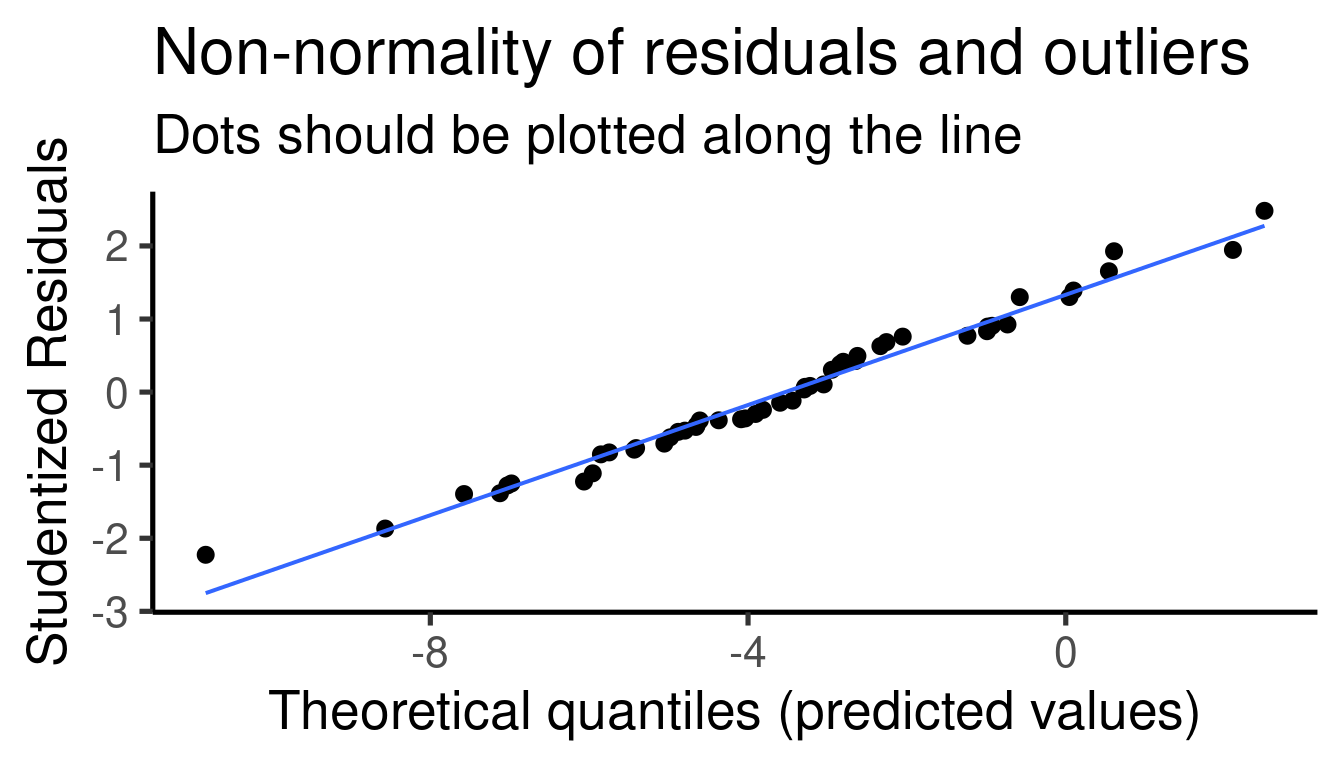

We should ‘diagnose’ the adequacy of the model by inspecting more

closely the distribution of residuals.The function

plot_model() from the package ‘sjPlot’ does a good job for

creating diagnostic plots for linear models:

## [[1]]

##

## [[2]]

##

## [[3]]

Coefficients table:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.03977364 0.1339616 0.2969033 0.7678214

## x1 -0.03667889 0.1474982 -0.2486735 0.8046743

In this example we added one predictor to the model so we got an additional estimate (and extra row, ‘x1’)

The model tell us that the estimate of ‘x1’ is -0.0366789 and that it is not significantly different from 0 (p-value = 0.8046743)

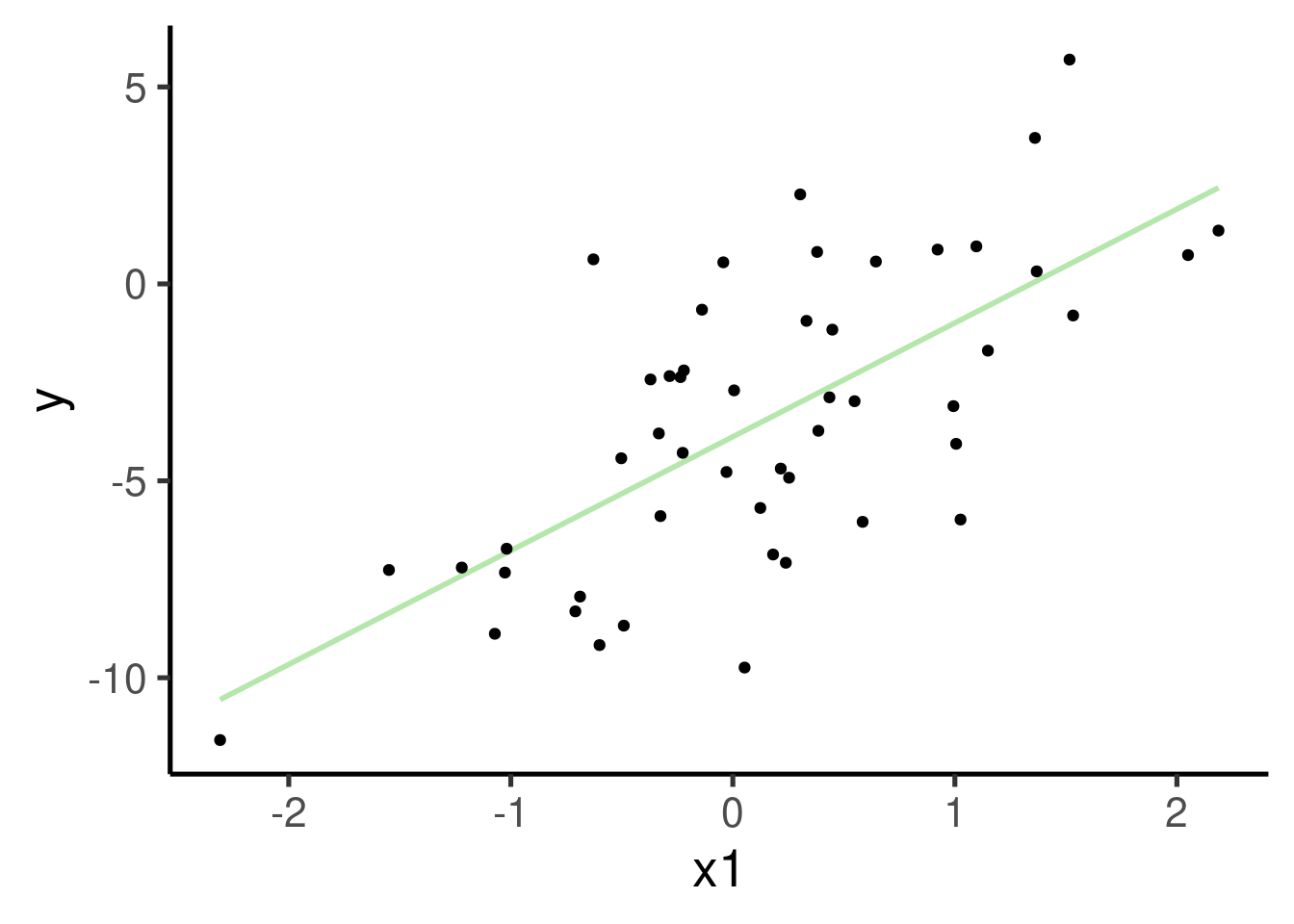

Simulating an associated predictor

We can use the linear model formula above to simulate two associated continuous variables like this:

# set seed

set.seed(123)

# number of observations

n <- 50

b0 <- -4

b1 <- 3

error <- rnorm(n = n, sd = 3)

# random variables

x1 <- rnorm(n = n, mean = 0, sd = 1)

y <- b0 + b1 * x1 + error

# create data frame

xy_data2 <- data.frame(x1, y)

Note that we also added an error term, so the association is not perfect. Let’s run the model and plot the association between the two variables:

# build model

xy_mod2 <- lm(formula = y ~ x1, data = xy_data2)

# plot

ggplot(xy_data2, aes(x = x1, y = y)) + geom_smooth(method = "lm",

se = FALSE) + geom_point() # plot points

The formula is the same than the previous model:

This is the summary of the model:

##

## Call:

## lm(formula = y ~ x1, data = xy_data2)

##

## Residuals:

## Min 1Q Median 3Q Max

## -6.0133 -1.8718 -0.3698 2.0604 6.3185

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -3.8807 0.4019 -9.656 7.86e-13 ***

## x1 2.8900 0.4425 6.531 3.85e-08 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 2.805 on 48 degrees of freedom

## Multiple R-squared: 0.4705, Adjusted R-squared: 0.4595

## F-statistic: 42.65 on 1 and 48 DF, p-value: 3.854e-08

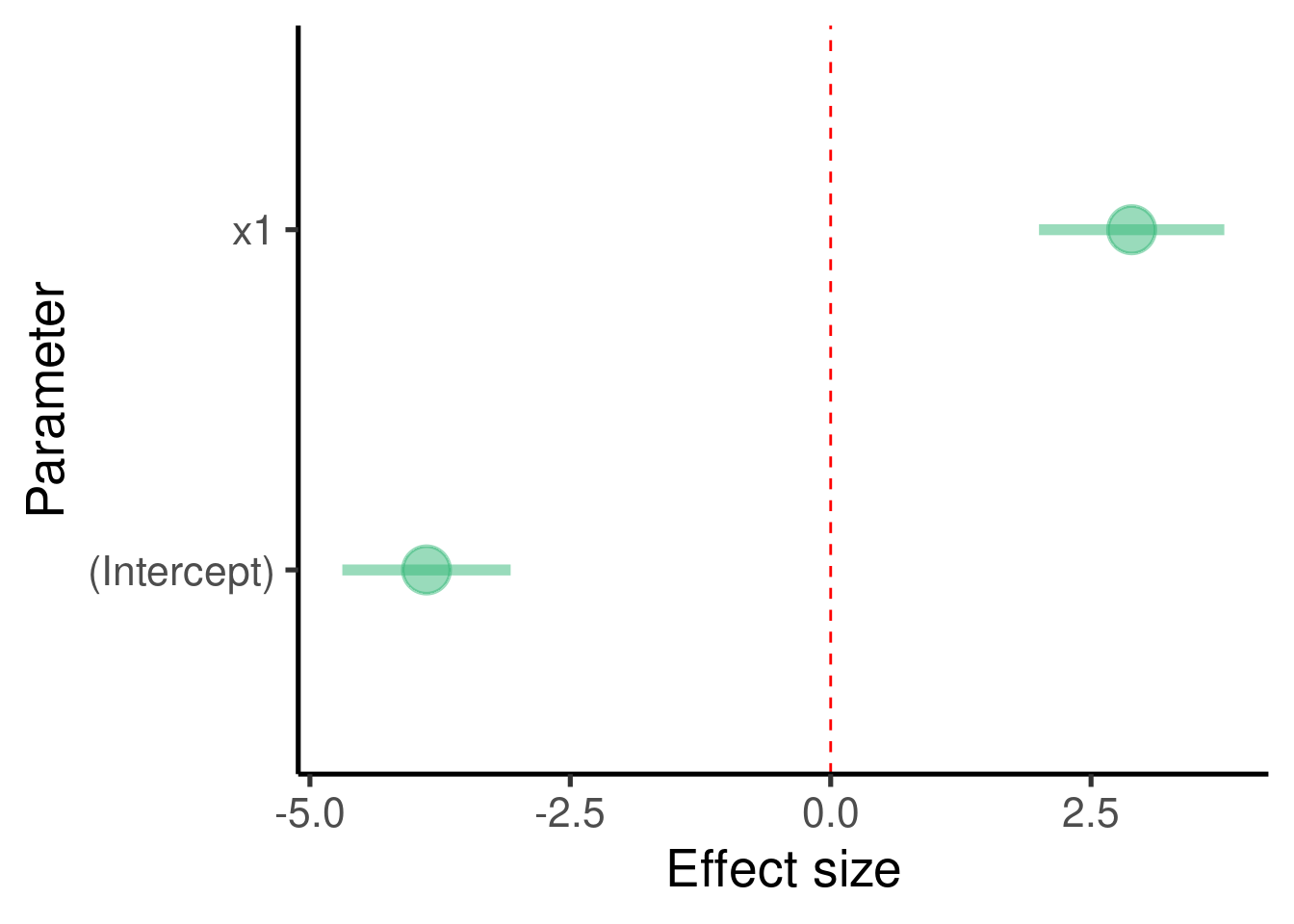

.. the effect size plot:

ci_df <- data.frame(param = names(xy_mod2$coefficients), est = xy_mod2$coefficients,

confint(xy_mod2))

ggplot(ci_df, aes(x = param, y = est)) + geom_hline(yintercept = 0,

color = "red", lty = 2) + geom_pointrange(aes(ymin = X2.5.., ymax = X97.5..)) +

labs(x = "Parameter", y = "Effect size") + coord_flip()

… and the model diagnostic plots:

## [[1]]

##

## [[2]]

##

## [[3]]

Coefficients table:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -3.880679 0.4018848 -9.656198 7.861629e-13

## x1 2.889963 0.4424946 6.531071 3.853652e-08The model tells us that \(\beta_1\) (the effect size of ‘x1’) is 2.8899633 and that it is significantly different from 0 (p-value = 3.8536524^{-8})

The simulated values for the regression parameters can be compared to the summary of the

lm()model to get a sense of the model precision:- \(\beta_1\) (the effect size of ‘x1’) was set to 3 and was estimated as 2.89 by the model

Exercise

Increase the sample size (

n) to 1000 or higherHow did the effect size (\(\beta\)) estimates change?

How did the standard error of the effect size change?

Now change

nto 15 and check again the model estimates (this time check the p-value as well)

Adding more than 1 predictor: multiple regression

Multiple linear regression is an extension of the simple linear regression model that can take several predictors:

The formula looks a bit busy, but it only means that any additional parameter will have its own estimate (\(\beta\)). The formula for a two-predictor linear regression looks like this:

.. and it can be simulated like this:

# set seed

set.seed(123)

# number of observations

n <- 50

b0 <- -4

b1 <- 3

b2 <- -2

error <- rnorm(n = n, mean = 0, sd = 3)

# random variables

x1 <- rnorm(n = n, mean = 0, sd = 1)

x2 <- rnorm(n = n, mean = 0, sd = 1)

y <- b0 + b1 * x1 + b2 * x2 + error

# create data frame

xy_data_multp <- data.frame(x1, x2, y)

# build model

xy_mod_multp <- lm(formula = y ~ x1 + x2, data = xy_data_multp)

summary(xy_mod_multp)##

## Call:

## lm(formula = y ~ x1 + x2, data = xy_data_multp)

##

## Residuals:

## Min 1Q Median 3Q Max

## -5.9861 -1.8931 -0.3633 2.0017 6.4127

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -3.8652 0.4169 -9.270 3.49e-12 ***

## x1 2.9015 0.4526 6.411 6.41e-08 ***

## x2 -1.9324 0.4142 -4.665 2.59e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 2.833 on 47 degrees of freedom

## Multiple R-squared: 0.6116, Adjusted R-squared: 0.595

## F-statistic: 37 on 2 and 47 DF, p-value: 2.234e-10

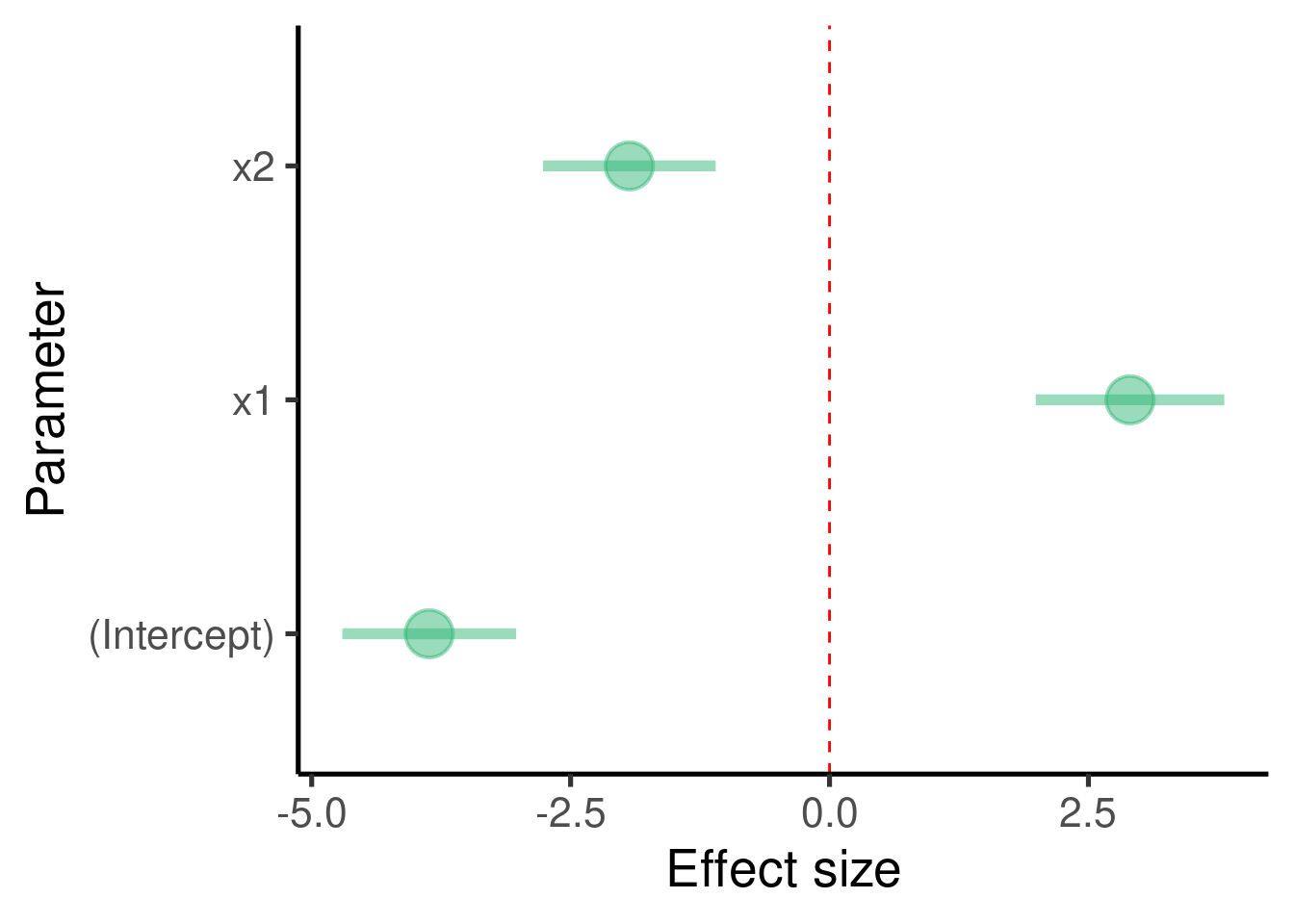

… plot the effect sizes:

ci_df <- data.frame(param = names(xy_mod_multp$coefficients), est = xy_mod_multp$coefficients,

confint(xy_mod_multp))

ggplot(ci_df, aes(x = param, y = est)) + geom_hline(yintercept = 0,

color = "red", lty = 2) + geom_pointrange(aes(ymin = X2.5.., ymax = X97.5..)) +

labs(x = "Parameter", y = "Effect size") + coord_flip()

… and the model diagnostic plots:

## [[1]]

##

## [[2]]

##

## [[3]]

Coefficients table:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -3.865192 0.4169493 -9.270172 3.485630e-12

## x1 2.901499 0.4525965 6.410785 6.413676e-08

## x2 -1.932353 0.4142204 -4.665035 2.585128e-05The model found that \(\beta_1\) (the effect size of ‘x1’) is 2.9014993 and that it is significantly different from 0 (p-value = 6.4136764^{-8})

It also found that the \(\beta_2\) (the effect size of ‘x2’) is -1.9323526 and that it is also significantly different from 0 (p-value = 2.5851282^{-5})

The simulated values for the regression parameters can be compared to the summary of the

lm()model to get a sense of the model precision:\(\beta_1\) was set to 3 and was estimated as 2.901

\(\beta_2\) (the effect size of ‘x2’) was set to -2 and was estimated as -1.932

Exercise

Set one of the effect sizes (\(\beta\)) to 0 (or very close to 0) and run again the model and its summary

How did the p-value change?

Simulate a scenario with two predictors in which only one of them is associated with the response

There is an important point to stress here: Multiple regression estimate the effect of a predictor after accounting for the effect of the other predictors in the model. In other words, new predictors in the model will attempt to explain variation in the data that was not explained by the other predictors. So the result of the multiple regression is not equivalent to the results of simple linear regressions on the same predictors. This can be easily shown by running those regressions:

# build models

x1y_mod <- lm(formula = y ~ x1, data = xy_data)

x2y_mod <- lm(formula = y ~ x2, data = xy_data)

# shortcut to coefficients

coef(xy_mod)## (Intercept) x1

## 0.03977364 -0.03667889## (Intercept) x1

## 0.03977364 -0.03667889## (Intercept) x2

## 0.04131955 0.02723902

The estimates for the same variables vary considerably between the multiple regression and the single predictor regressions.

This point is further demonstrated by the fact that, if one of the predictors has no influence at all on the response, the effect of the additional predictor will converge to its effect in a simple linear regression. To simulate this scenario we set b2 to 0:

# set seed

set.seed(123)

# number of observations

n <- 50

b0 <- -4

b1 <- 3

b2 <- 0

error <- rnorm(n = n, mean = 0, sd = 1)

# random variables

x1 <- rnorm(n = n, mean = 0, sd = 1)

x2 <- rnorm(n = n, mean = 0, sd = 1)

y <- b0 + b1 * x1 + b2 * x2 + error

# create data frame

xy_data <- data.frame(x1, x2, y)

# build model

xy_mod <- lm(formula = y ~ x1 + x2, data = xy_data)

x1y_mod <- lm(formula = y ~ x1, data = xy_data)

# shortcut to coefficients

coef(xy_mod)## (Intercept) x1 x2

## -3.95506411 2.96716643 0.02254914## (Intercept) x1

## -3.960226 2.963321The estimate for \(\beta_1\) was almost the same in the multiple regression (2.9671664) and the single predictor regression (2.9633211)

For convenience we used coef() to extract only the

estimates from the regression, but the values are the same we get with

summary(model).

Having a categorical predictor

For categorical predictors we can first create a binary (0, 1) variable and then add labels to each value:

# set seed

set.seed(13)

# number of observations

n <- 50

b0 <- -3

b1 <- 2

error <- rnorm(n = n, mean = 0, sd = 3)

# random variables

x1_num <- sample(0:1, size = n, replace = TRUE)

y <- b0 + b1 * x1_num + error

x1 <- factor(x1_num, labels = c("a", "b"))

# create data frame

xy_data_cat <- data.frame(x1, x1_num, y)

head(xy_data_cat)| x1 | x1_num | y |

|---|---|---|

| b | 1 | 0.6630 |

| a | 0 | -3.8408 |

| a | 0 | 2.3255 |

| b | 1 | -0.4380 |

| a | 0 | 0.4276 |

| a | 0 | -1.7534 |

And this is how it is formally written:

Same thing as with continuous predictors.

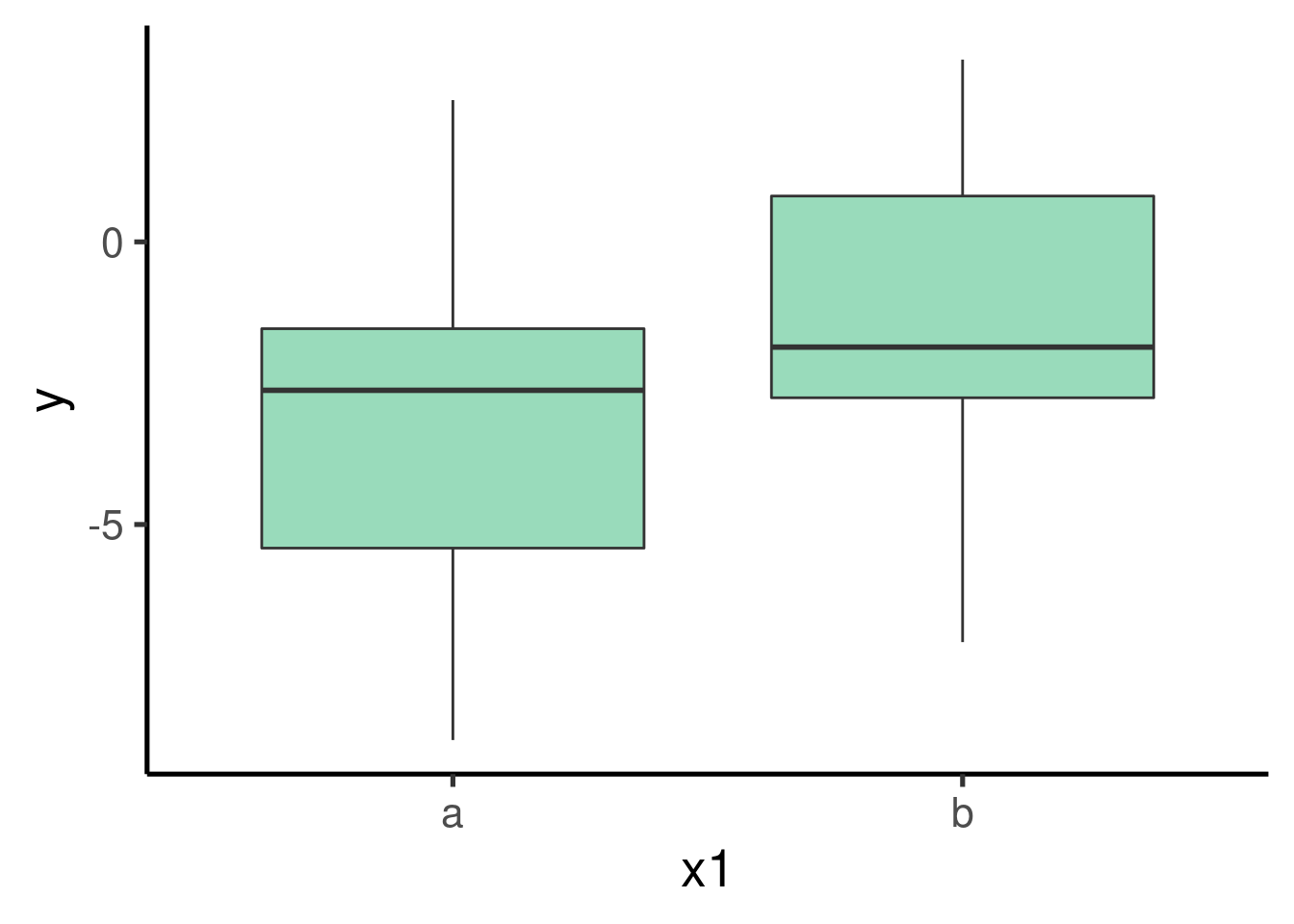

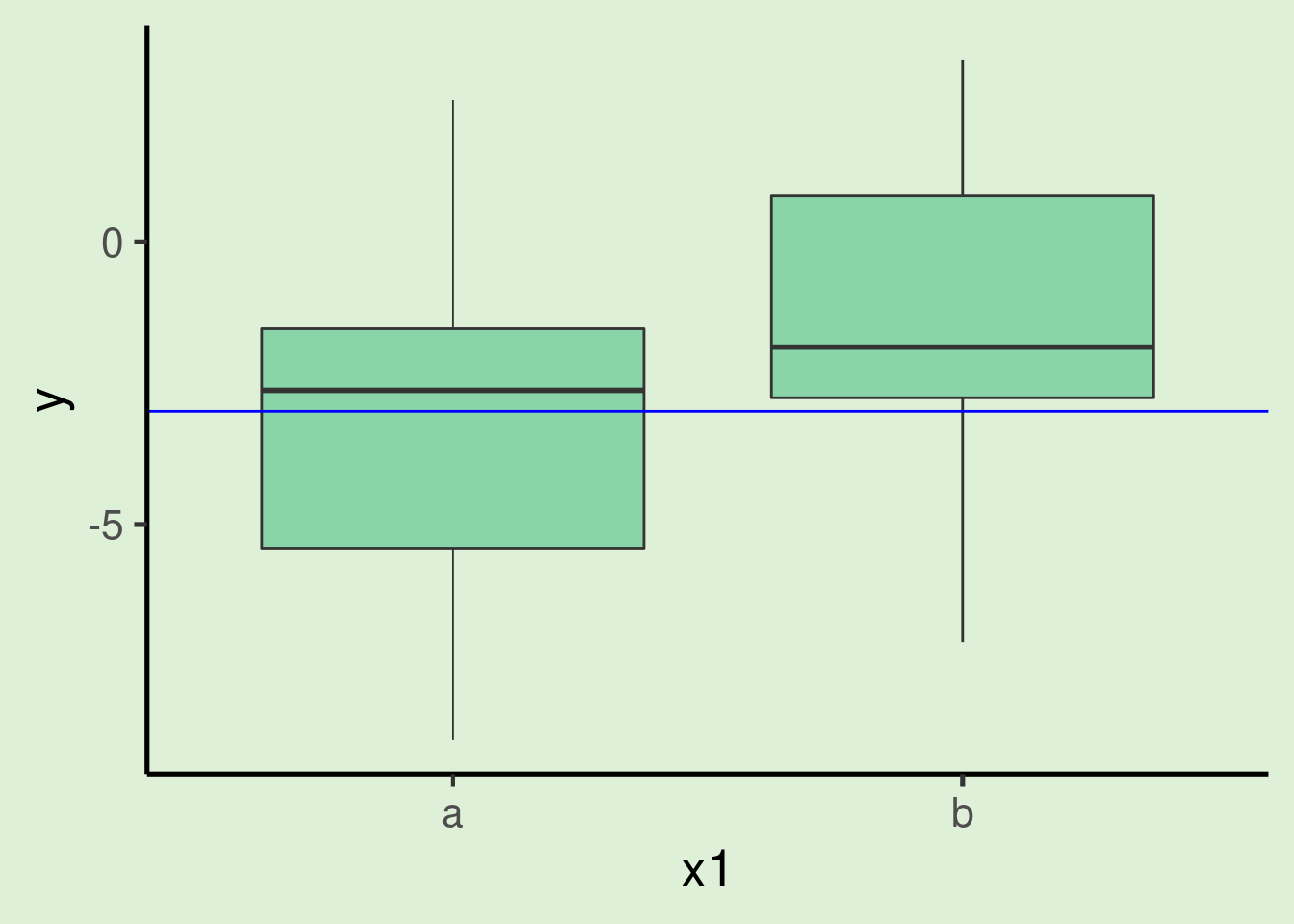

We can explore the pattern in the data using a boxplot:

… and get the estimates of the model:

##

## Call:

## lm(formula = y ~ x1, data = xy_data_cat)

##

## Residuals:

## Min 1Q Median 3Q Max

## -5.8977 -1.9092 -0.0936 1.8090 5.5059

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -2.9974 0.5583 -5.369 2.27e-06 ***

## x1b 1.8140 0.8416 2.155 0.0362 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 2.954 on 48 degrees of freedom

## Multiple R-squared: 0.08825, Adjusted R-squared: 0.06925

## F-statistic: 4.646 on 1 and 48 DF, p-value: 0.03617

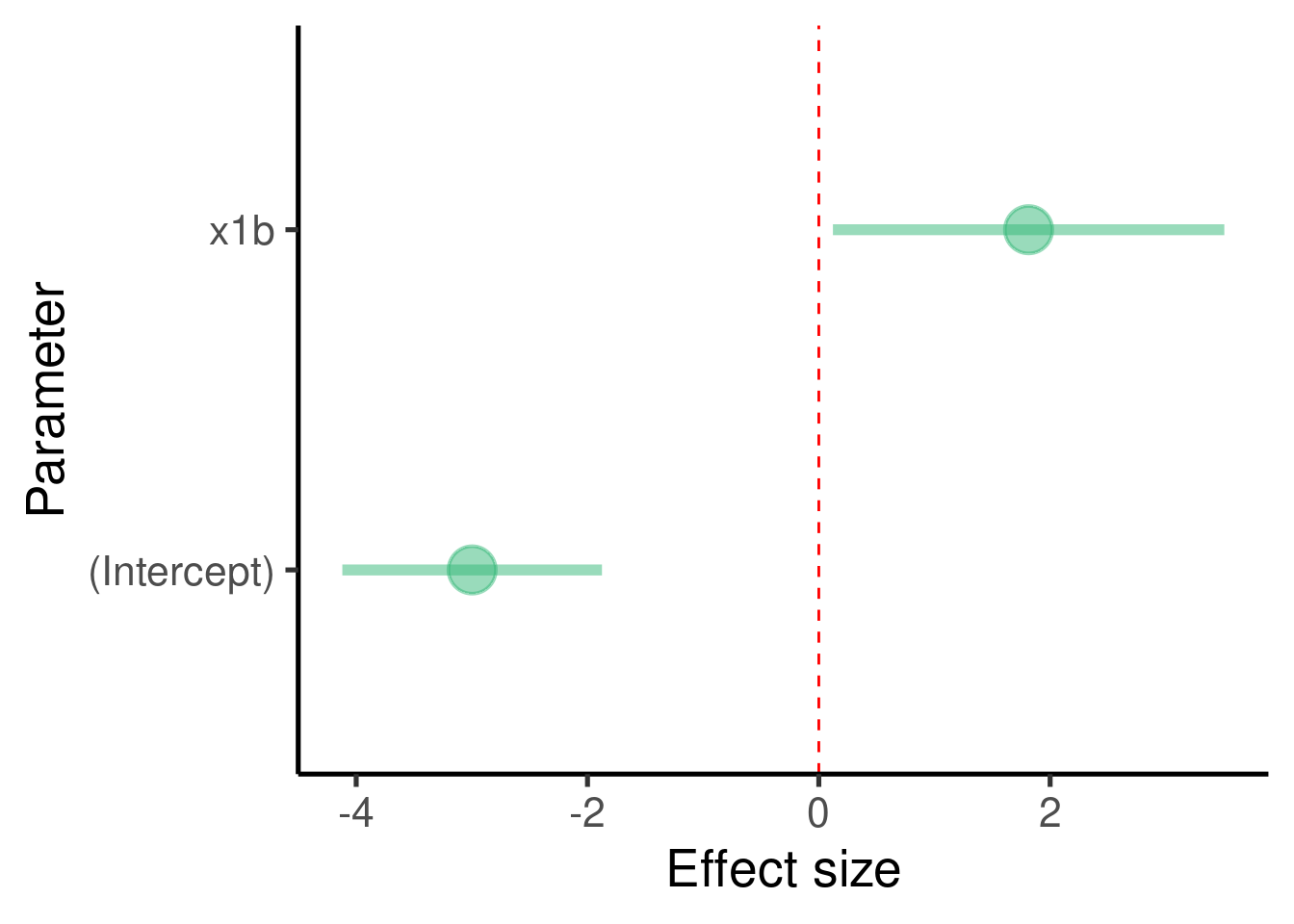

… plot the effect sizes:

ci_df <- data.frame(param = names(xy_mod_cat$coefficients), est = xy_mod_cat$coefficients,

confint(xy_mod_cat))

ggplot(ci_df, aes(x = param, y = est)) + geom_hline(yintercept = 0,

color = "red", lty = 2) + geom_pointrange(aes(ymin = X2.5.., ymax = X97.5..)) +

labs(x = "Parameter", y = "Effect size") + coord_flip()

… and the model diagnostic plots:

Coefficients table:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -2.997415 0.5582513 -5.369293 2.267724e-06

## x1b 1.813997 0.8415955 2.155426 3.617227e-02The model found that \(\beta_1\) (the effect size of ‘x1’) is 1.8139973 and that it is significantly different from 0 (p-value = 0.0361723)

The simulated values for the regression parameters can be compared to the summary of the

lm()model to get a sense of the model precision:- \(\beta_1\) was set to 2 and was estimated as 1.814

Note that in this case the intercept refers to the estimate for the level ‘a’ in the categorical predictor, which was used as a baseline:

# plot

ggplot(xy_data_cat, aes(x = x1, y = y)) + geom_boxplot() + geom_hline(yintercept = xy_mod_cat$coefficients[1],

col = "blue")

- Hence the intercept is the same as the mean of y for the category ‘a’:

## [1] -2.997415- Note also that the estimate label is ‘x1b’, not ‘x1’ as in the continuous predictors. This is because in this case the estimate refers to the difference between the two levels of the categorical variable (‘a’ and ‘b’). More specifically, it tells us that in average observations from category ‘b’ are 1.814 higher than observations in category ‘a’.

Exercise

- Unbalanced data when having categories (i.e. some categories have way more observations than others) can be problematic for statistical inference. Modify the code above to simulate a highly unbalanced data set and check the precision of the model.

In a regression model categorical predictors are also represented as numeric vectors. More precisely, categorical predictors are coded as 0s and 1s, in which 1 means ‘belongs to the same category’ and 0 ‘belongs to a different category’. We kept the original numeric vector (‘x1_num’) when simulating the data set with the categorical predictor:

| x1 | x1_num | y |

|---|---|---|

| b | 1 | 0.6630 |

| a | 0 | -3.8408 |

| a | 0 | 2.3255 |

| b | 1 | -0.4380 |

| a | 0 | 0.4276 |

| a | 0 | -1.7534 |

Note that ‘b’s in the ’x1’ column are converted into 1 in the ‘x1_num’ column and ’a’s converted into 0. This is called an indicator variable and the process is known as dummy coding.

We can actually use the numeric vector in the regression model and get the exact same results:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -2.997415 0.5582513 -5.369293 2.267724e-06

## x1b 1.813997 0.8415955 2.155426 3.617227e-02# build model with dummy variable

xy_mod_num <- lm(formula = y ~ x1_num, data = xy_data_cat)

# summary with dummy coding

summary(xy_mod_num)$coefficients## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -2.997415 0.5582513 -5.369293 2.267724e-06

## x1_num 1.813997 0.8415955 2.155426 3.617227e-02

Things get a bit more complicated when dummy coding a categorical predictor with more than two levels. But the logic is the same.

Interaction terms

A statistical interaction refers to an effect of a response variable that is mediated by a second variable.

This is easier to understand by looking at the interaction of a continuous and a binary variable:

# set seed

set.seed(123)

# number of observations

n <- 50

b0 <- -4

b1 <- 3

b2 <- 1.7

b3 <- -3

error <- rnorm(n = n, mean = 0, sd = 3)

# random variables

x1 <- rbinom(n = n, size = 1, prob = 0.5)

x2 <- rnorm(n = n, mean = 0, sd = 1)

# interaction is added as the product of x1 and x2

y <- b0 + b1 * x1 + b2 * x2 + b3 * x1 * x2 + error

x1 <- factor(x1, labels = c("a", "b"))

# create data frame

xy_data_intr <- data.frame(x1, x2, y)

head(xy_data_intr)| x1 | x2 | y |

|---|---|---|

| b | 1.0256 | -4.0147 |

| a | -0.2848 | -5.1746 |

| a | -1.2207 | -1.3991 |

| b | 0.1813 | -1.0242 |

| a | -0.1389 | -3.8483 |

| b | 0.0058 | 4.1377 |

# build model

xy_mod_intr <- lm(formula = y ~ x1 + x2 + x1 * x2, data = xy_data_intr)

# save summary to make best fit lines

xy_summ_intr <- summary(xy_mod_intr)

xy_summ_intr##

## Call:

## lm(formula = y ~ x1 + x2 + x1 * x2, data = xy_data_intr)

##

## Residuals:

## Min 1Q Median 3Q Max

## -6.2220 -1.6639 -0.1575 1.6503 6.3832

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -4.1930 0.5702 -7.353 2.70e-09 ***

## x1b 3.5974 0.8061 4.463 5.19e-05 ***

## x2 1.3274 0.6816 1.947 0.05761 .

## x1b:x2 -2.9720 0.9623 -3.089 0.00341 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 2.828 on 46 degrees of freedom

## Multiple R-squared: 0.3959, Adjusted R-squared: 0.3565

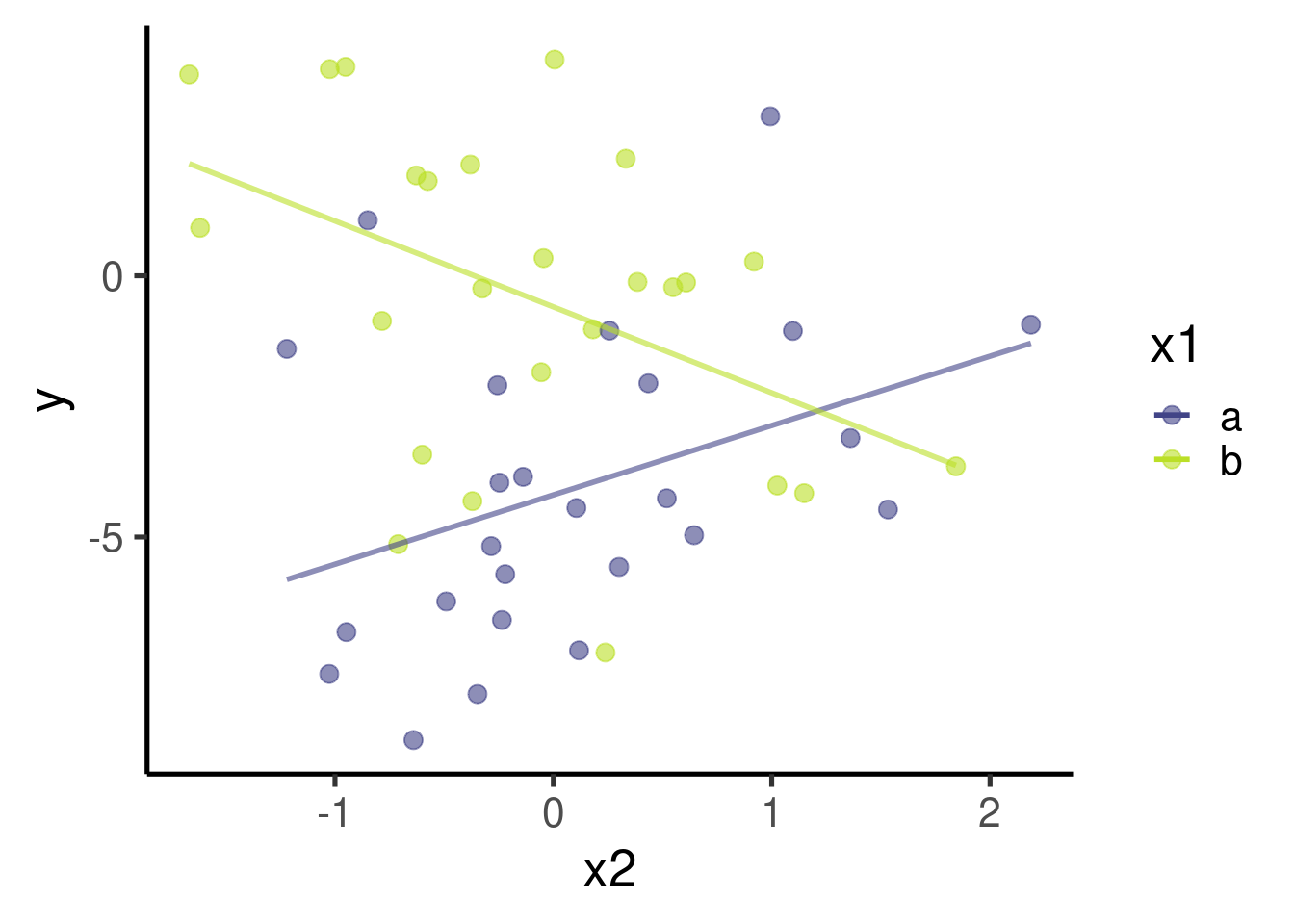

## F-statistic: 10.05 on 3 and 46 DF, p-value: 3.291e-05It also helps to plot the data (don’t worry too much about all the code):

# plot

ggplot(data = xy_data_intr, aes(x = x2, y = y, color = x1)) + geom_point(size = 3) +

geom_smooth(method = "lm", se = FALSE)

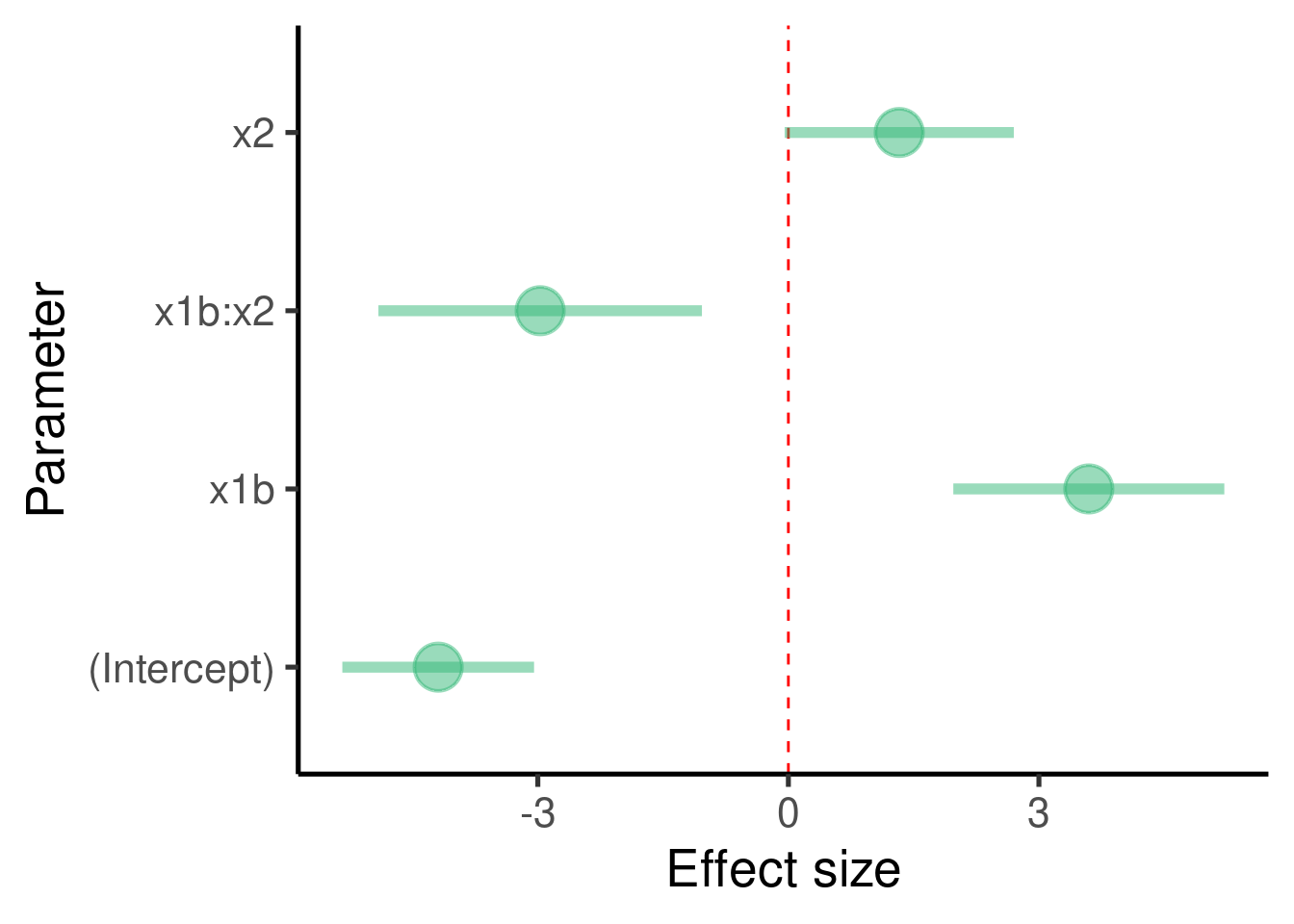

… and the effect sizes:

ci_df <- data.frame(param = names(xy_mod_intr$coefficients), est = xy_mod_intr$coefficients,

confint(xy_mod_intr))

ggplot(ci_df, aes(x = param, y = est)) + geom_hline(yintercept = 0,

color = "red", lty = 2) + geom_pointrange(aes(ymin = X2.5.., ymax = X97.5..)) +

labs(x = "Parameter", y = "Effect size") + coord_flip()

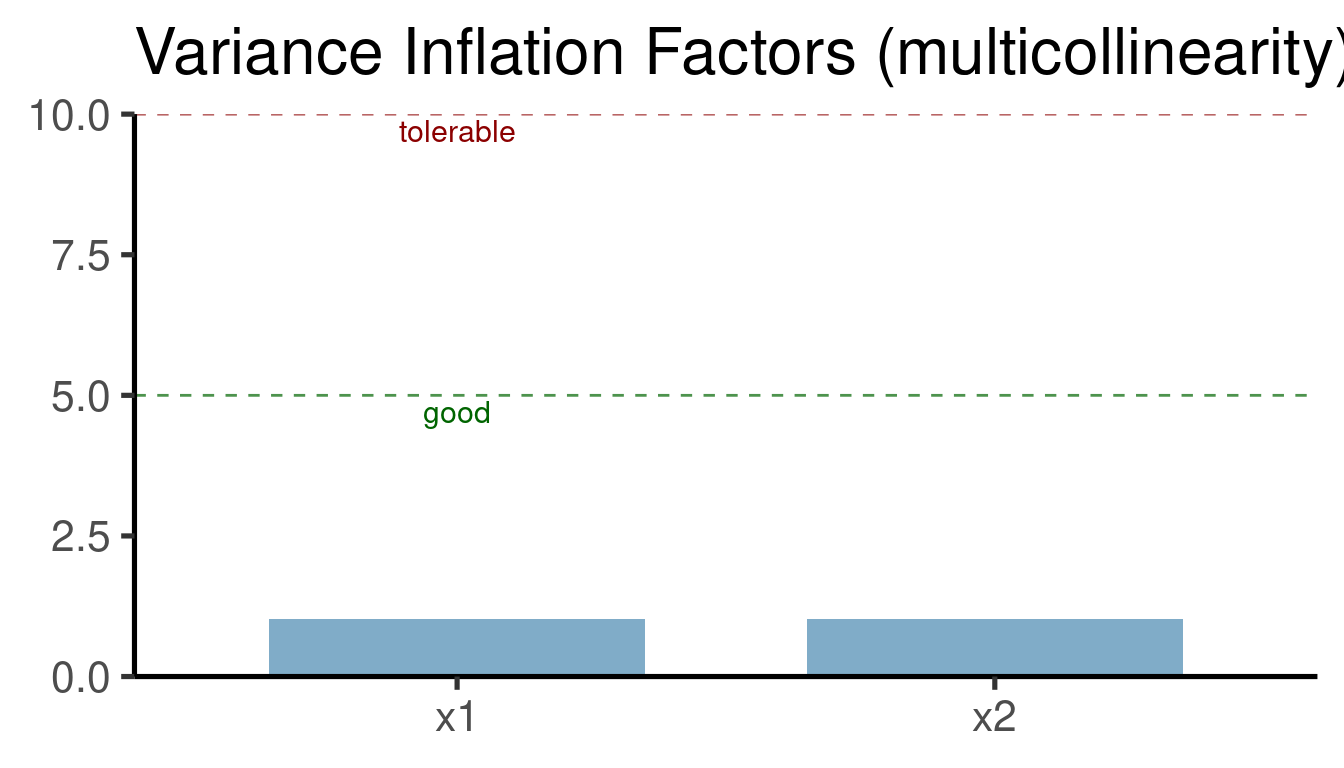

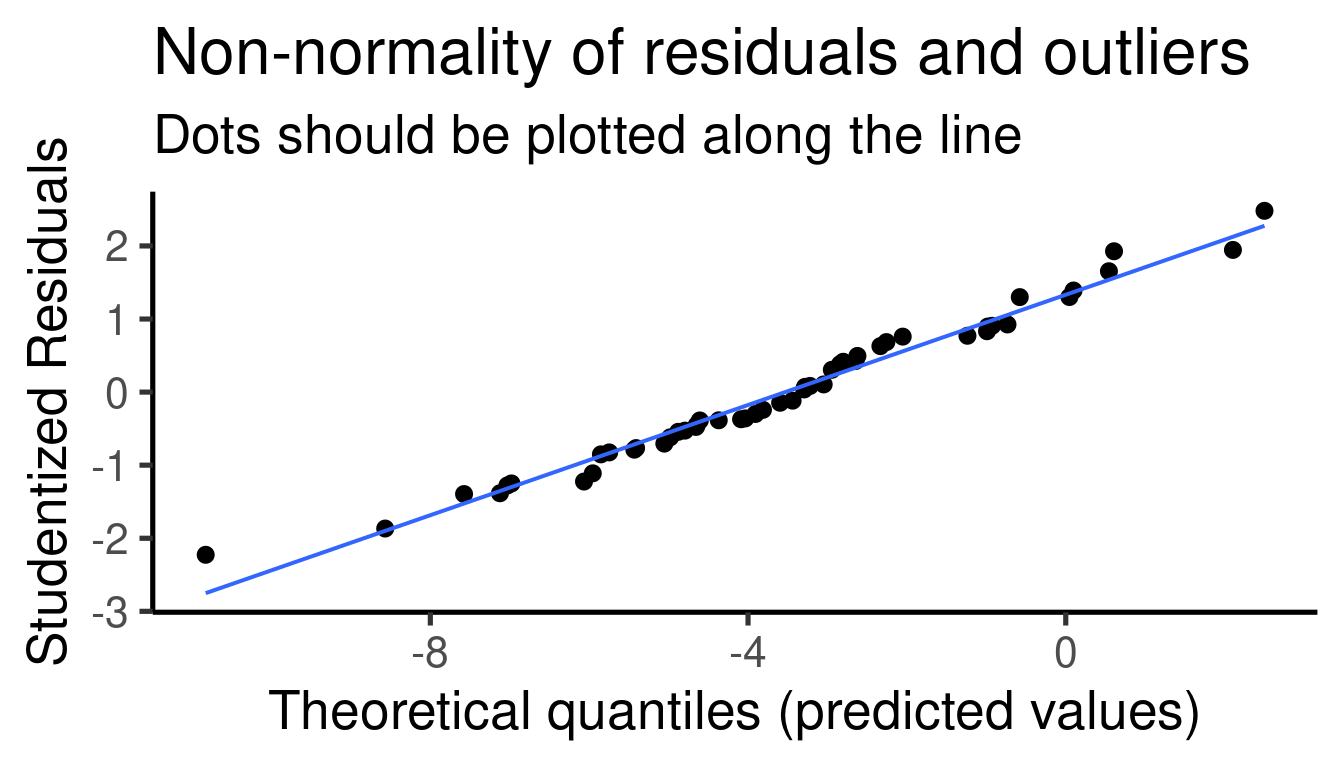

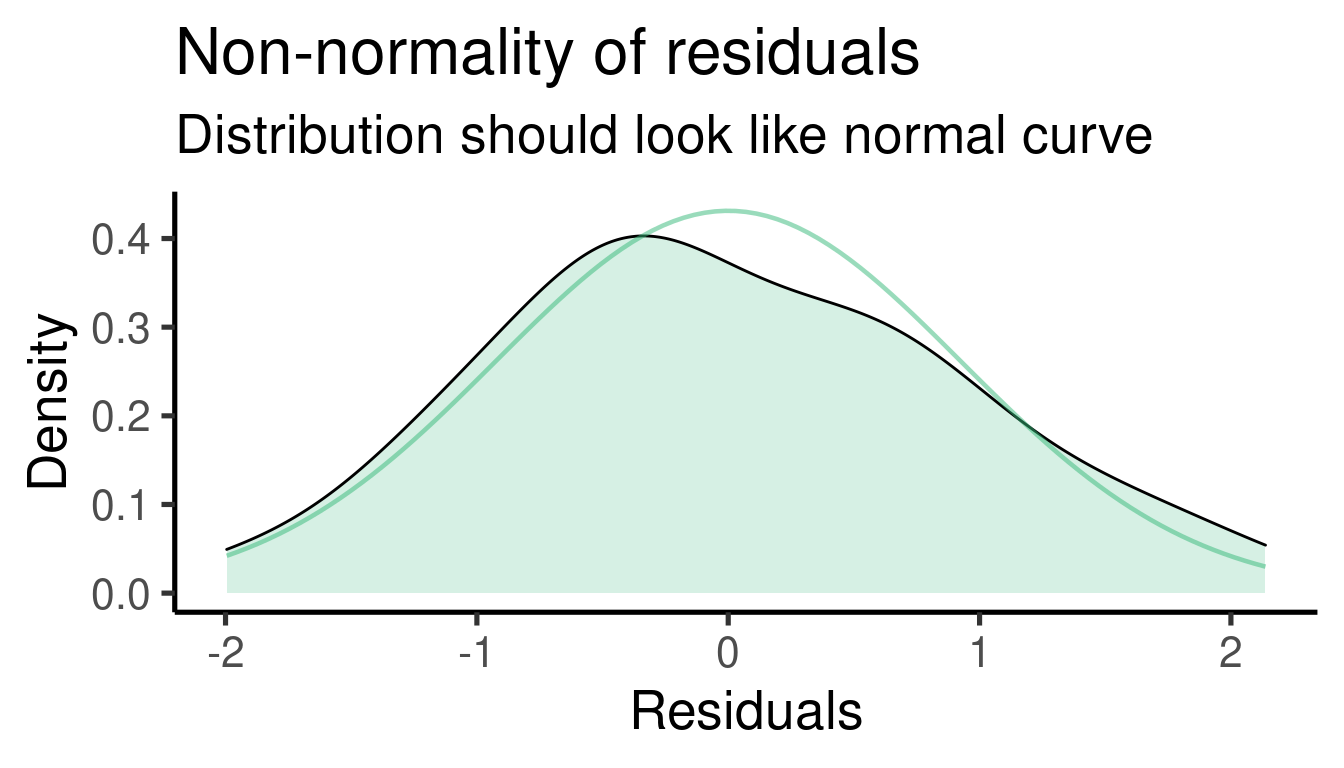

We should also check the diagnostic plots:

## [[1]]

##

## [[2]]

##

## [[3]]

##

## [[4]]

Coefficients table:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -4.193018 0.5702328 -7.353168 2.697816e-09

## x1b 3.597411 0.8060688 4.462908 5.194964e-05

## x2 1.327353 0.6816129 1.947370 5.760963e-02

## x1b:x2 -2.971961 0.9622652 -3.088505 3.406062e-03The model found that \(\beta_1\) (the effect size of ‘x1-b’ to ‘x1-a’) is 3.5974112 and that it is significantly different from 0 (p-value = 5.1949639^{-5})

The model found that \(\beta_2\) (the effect size of ‘x2’) is 1.3273528 and that it is significantly different from 0 (p-value = 0.0576096). This is actually the slope of the relation between x2 and y when x1 = ‘a’

The model found that \(\beta_3\) (the effect size of the interaction term ‘x1 * x2’) is -2.9719608 and that it is significantly different from 0 (p-value = 0.0034061). This is the difference between the slopes of x2 vs y when x1 = ‘a’ and x2 vs y when x1 = ‘b’.

The simulated values for the regression parameters can be compared to the summary of the

lm()model to get a sense of the model precision:\(\beta_1\) was set to 3 and was estimated as 3.597

\(\beta_2\) was set to 1.7 and was estimated as 1.327

\(\beta_3\) was set to -3 and was estimated as -2.972

Exercise

Modified the code use to simulate a single associated predictor by gradually increasing the error. This is done by increasing the ‘sd’ argument in

error <- rnorm(n = n, sd = 2)Take a look at how larger errors affect inference (so you also need to run the models)

Now replace the error term with

error <- rexp(n = n, rate = 0.2). This is creating an error with an exponential distribution (so non-normal). This is supposed to be problematic for the inferential power of these models. Compare the estimates you got to the simulation values (‘b0’ and ‘b1’). Explore the distribution of residuals (plot(model_name)) for both ‘normal’ and ‘exponential’ error models.Collinearity (the presence of correlated predictors) is supposed to affect the stability of multiple regression. The following code creates two highly collinear predictors (‘x1’ and ‘x2’). The last line of code shows the correlation between them.

# set seed

set.seed(123)

# number of observations

n <- 50

b0 <- -4

b1 <- 3

b2 <- -2

error <- rnorm(n = n, mean = 0, sd = 1)

# random variables

x1 <- rnorm(n = n, mean = 0, sd = 1)

# make x2 very similar to x2 (adding little variation)

x2 <- x1 + rnorm(n = n, mean = 0, sd = 0.3)

cor(x1, x2)## [1] 0.9464161Build a multiple regression model for this data (y ~ x1 + x2). You can use the same code as in the section Adding more than 1 predictor: multiple regression.

How is the inference affected by the presence of collinear predictors? Make the diagnostic plots for this model (

plot(model_name)).Simulate a data set with three predictors in which only two of them are highly collinear. Fit a multiple regression model (y ~ x1 + x2 + x3) for that data and look at how collinearity affects the estimate for the non-collinear predictor.

Extending the linear models to more complex data structures

Generalized linear models

GLM’s allow us to model the association to response variables that do not fit to a normal distribution. Furthermore, they allow to model distributions that more closely resemble the process that generated the data. The following data set creates a data set with a response representing counts (so non-normal):

set.seed(1234)

# sample size

n <- 50

# regression coefficients

b0 <- 1.2

b1 <- 1.3

b2 <- 0

# generate variables

y <- rpois(n = 3, lambda = 6.5) # lambda = average rate of success

x2 <- seq(-0.5, 0.5, , length(y))

x1 <- (log(y) - b0 - b2 * x2)/b1

# create data frame

xy_data_pois <- data.frame(x1, x2, y)

head(xy_data_pois)| x1 | x2 | y |

|---|---|---|

| 0.1433 | -0.5 | 4 |

| 0.5738 | 0.0 | 7 |

| 0.5738 | 0.5 | 7 |

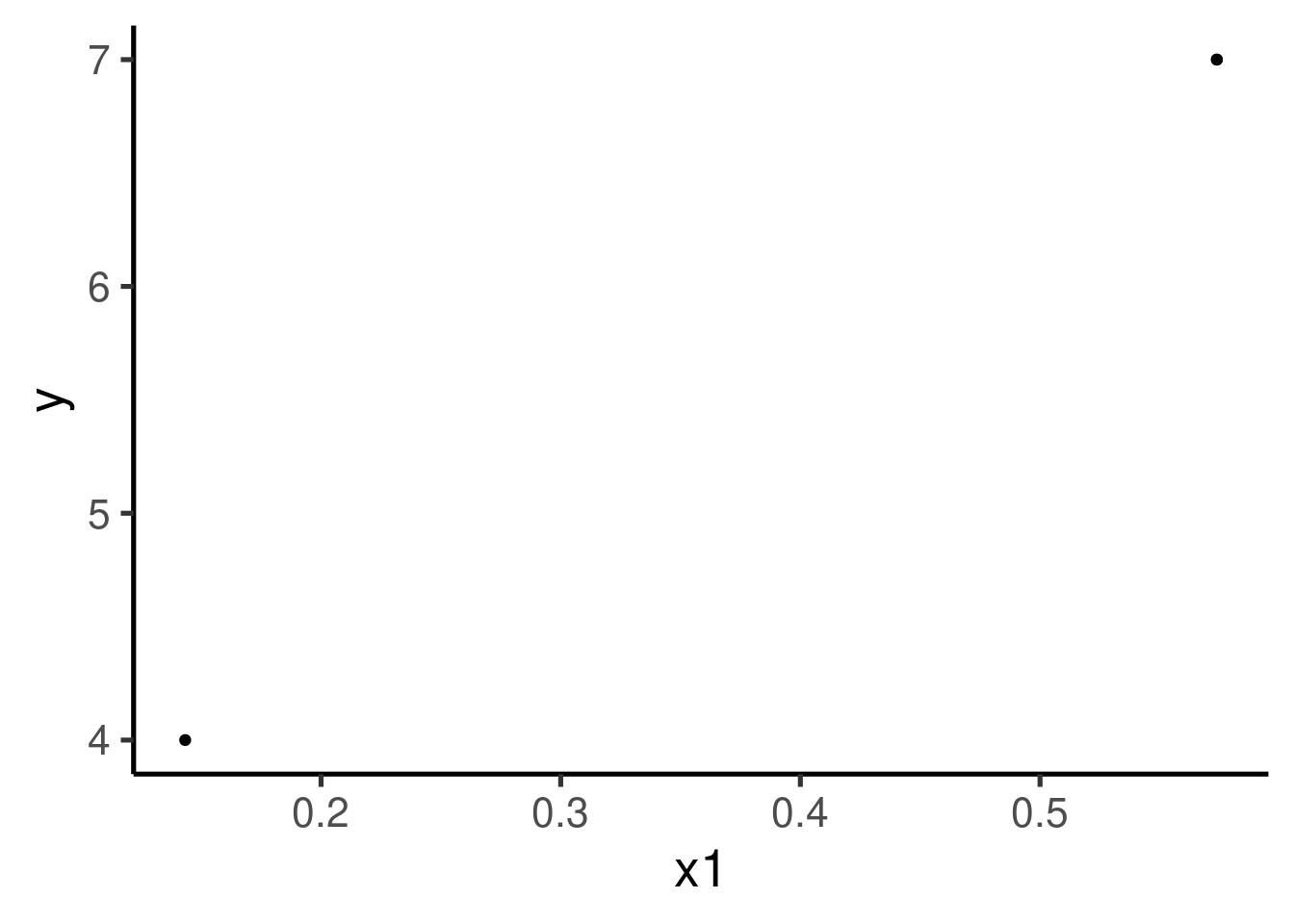

Let also plot ‘x1’ vs ‘y’:

The relation does not seem very linear nor the variance seems to be constant across ‘x1’.

We can relaxed the normal distribution requirement with GLMs.

glm() is a base R function that help us do the trick. For

this example the most appropriate distribution is Poisson. This

can be set in the ‘family’ argument like this:

As you can see the only extra argument compared to lm()

is ‘family’. The rest is just the ‘formula’ and ‘data’ we are already

familiar with. So again, we can build upon of our knowledge on linear

models to extend them to more complex data structures.

We also need to run summary() to get model output:

##

## Call:

## glm(formula = y ~ x1 + x2, family = poisson(), data = xy_data_pois)

##

## Deviance Residuals:

## [1] 0 0 0

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 1.200e+00 1.046e+00 1.147 0.251

## x1 1.300e+00 2.281e+00 0.570 0.569

## x2 1.187e-16 1.069e+00 0.000 1.000

##

## (Dispersion parameter for poisson family taken to be 1)

##

## Null deviance: 1.0725e+00 on 2 degrees of freedom

## Residual deviance: 2.6645e-15 on 0 degrees of freedom

## AIC: 16.881

##

## Number of Fisher Scoring iterations: 3

Coefficients table:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 1.200000e+00 1.046119 1.147097e+00 0.2513416

## x1 1.300000e+00 2.281163 5.698848e-01 0.5687558

## x2 1.186878e-16 1.069045 1.110223e-16 1.0000000The model tells us that \(\beta_1\) (the effect size of ‘x1’) is 1.3 and that it is significantly different from 0 (p-value = 0.5687558). This is actually interpreted as an increase in 1 unit of ‘x1’ results in ‘y’ (rate) by a factor of exp(1.3) = 3.6692967.

The model also tells us that \(\beta_2\) (the effect size of ‘x2’) is 1.1868783^{-16} and that it is significantly different from 0 (p-value = 1). This is means that an increase in 1 unit of ‘x2’ results in ‘y’ (rate) by a factor of exp(1.1868783^{-16}) = 1.

Exercise

- Try fitting a

lm()model (so with a gaussian distribution), compare the results and check the residuals (plot_model(model_name, type = "diag"))

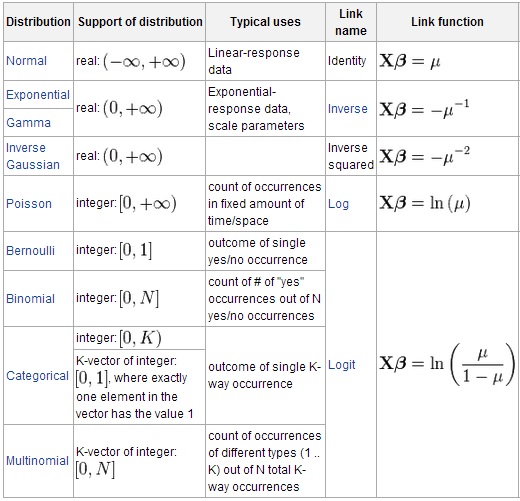

Many other distribution and link functions are available:

Mixed-effect models

Sometimes our data sets include additional levels of structure. For instance, when we sample several individuals from different populations. In those cases variation at the higher structural level (populations) might preclude detecting patterns at the lower level (individuals).

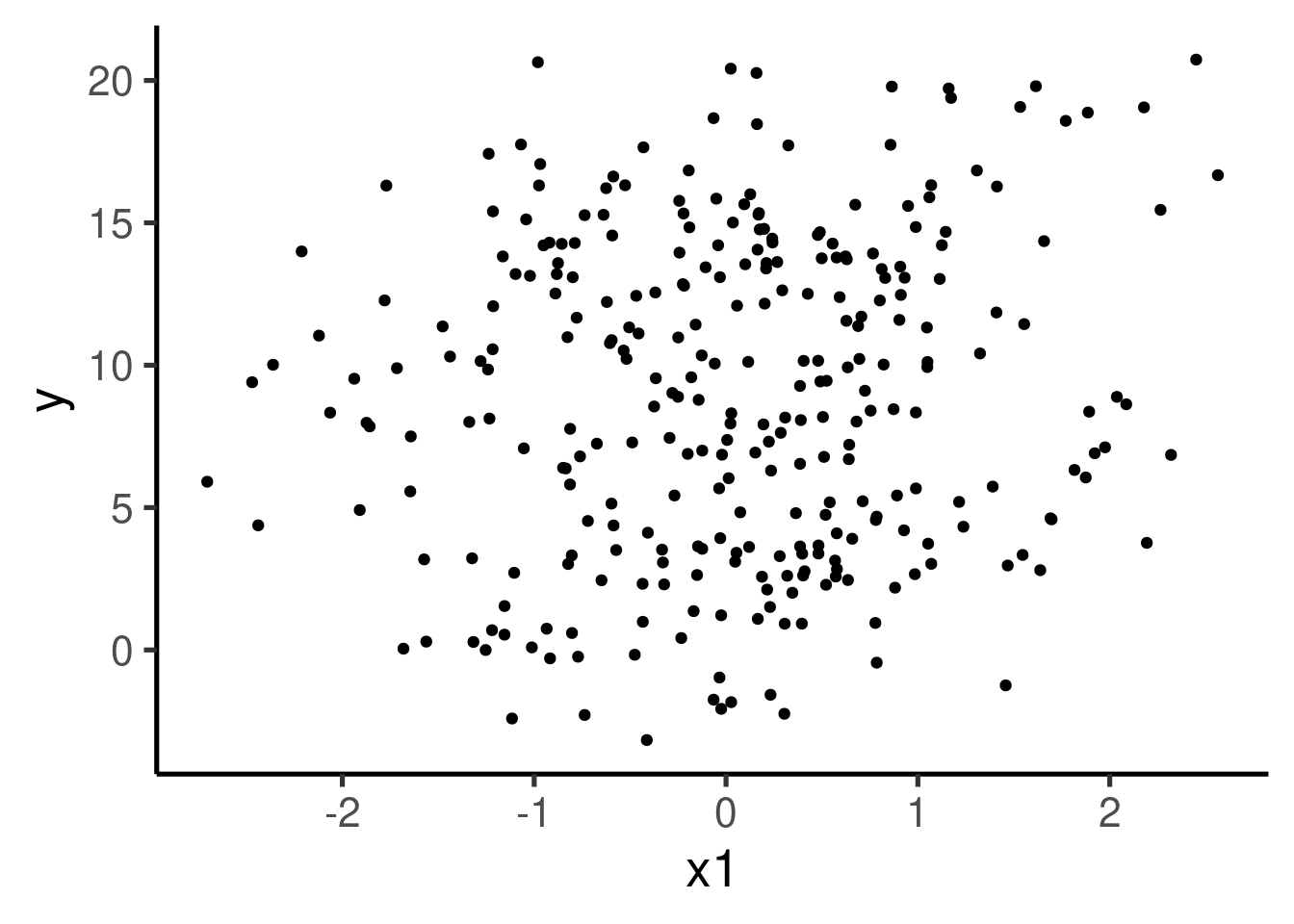

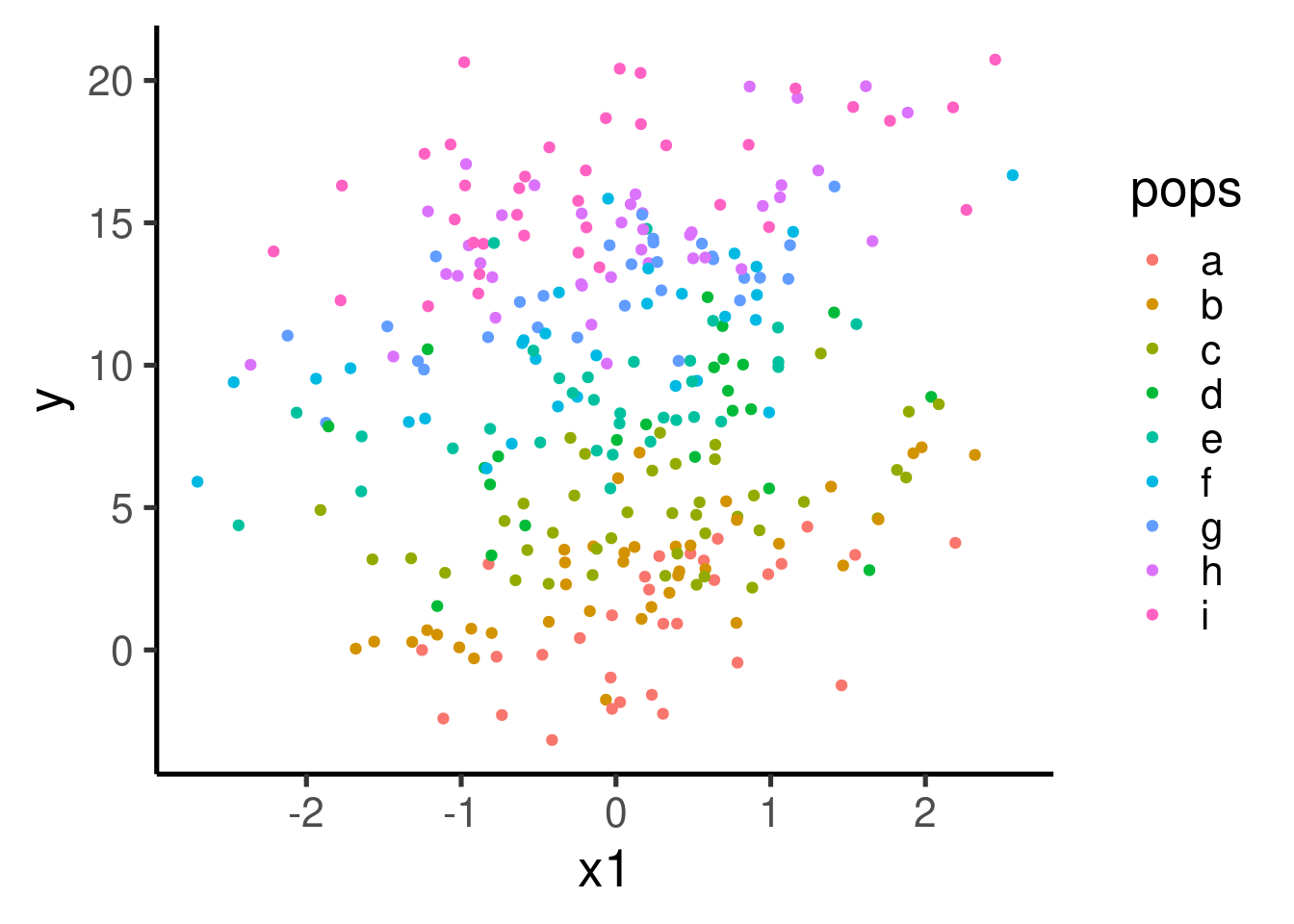

Let’s simulate some data that resembles that scenario. We have two continuous predictor (x1) and a continuous response (y). Each sample comes from 1 of 8 different populations (pops):

# x<- 1 set seed

set.seed(28)

# number of observations

n <- 300

b0 <- 1

b1 <- 1.3

pops <- sample(0:8, size = n, replace = TRUE)

error <- rnorm(n = n, mean = 0, sd = 2)

# random variables

x1 <- rnorm(n = n, mean = 0, sd = 1)

y <- b0 + pops * 2 + b1 * x1 + error

# add letters

pops <- letters[pops + 1]

# create data set

xy_data_pops <- data.frame(x1, y, pops)

head(xy_data_pops, 10)| x1 | y | pops |

|---|---|---|

| 1.5461 | 3.3378 | a |

| 0.5683 | 3.1400 | a |

| -0.6385 | 15.2824 | i |

| 0.9842 | 2.6610 | a |

| 1.0536 | 3.7312 | b |

| -0.8229 | 3.0189 | a |

| -1.3237 | 3.2186 | c |

| 2.1785 | 19.0542 | i |

| 2.2648 | 15.4549 | i |

| -0.7784 | 11.6670 | h |

We can explore the relation between y and x1 with a plot:

can you clearly see the pattern of association between the two variables we used to simulate the data? We can further explore the data with a simple linear regression model:

##

## Call:

## lm(formula = y ~ x1, data = xy_data_pops)

##

## Residuals:

## Min 1Q Median 3Q Max

## -11.7547 -5.1191 0.1855 4.6016 12.4090

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 8.8520 0.3253 27.214 <2e-16 ***

## x1 0.6332 0.3292 1.924 0.0554 .

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 5.628 on 298 degrees of freedom

## Multiple R-squared: 0.01226, Adjusted R-squared: 0.008949

## F-statistic: 3.7 on 1 and 298 DF, p-value: 0.05537

Despite having simulated a non-zero \(\beta_1\) we have no significant association according to this model and the estimated for \(\beta_1\) is far from the simulated one. This poor inference is due to the fact that we are ignoring an important feature of our data, the grouping of samples in ‘populations’.

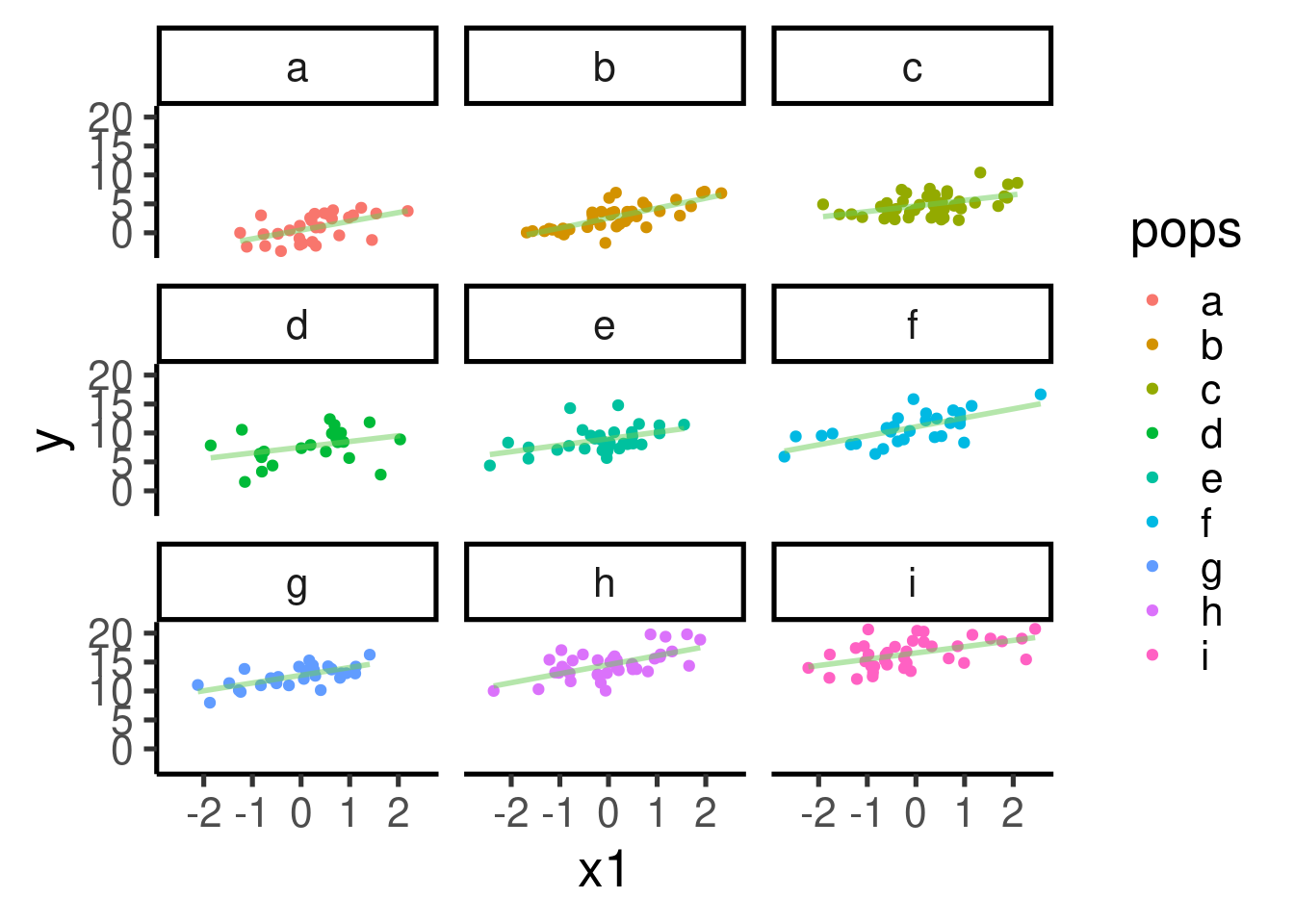

Mixed-effect models (a.k.a. multi-level models or varying effect models) can help us account for these additional features, significantly improving our inferential power. Let’s color each of the populations to see how the variables co-vary for each data sub-group:

There seems to be a clear pattern of positive association between x1 and y. The pattern becomes a bit more obvious if we plot each population in its own panel:

ggplot(data = xy_data_pops, aes(x = x1, y = y, color = pops)) + geom_point() +

facet_wrap(~pops) + geom_smooth(method = "lm", se = FALSE)

Let’s build a mixed-effect model using population as a varying intercept:

## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: y ~ x1 + (1 | pops)

##

## REML criterion at convergence: 1296.4

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -3.5236 -0.6575 -0.0316 0.6124 3.2454

##

## Random effects:

## Groups Name Variance Std.Dev.

## pops (Intercept) 30.073 5.484

## Residual 3.762 1.940

## Number of obs: 300, groups: pops, 9

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 8.7881 1.8315 8.0031 4.798 0.00136 **

## x1 1.3166 0.1151 290.0679 11.440 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## x1 -0.003

The model correctly detected the simulated pattern and the estimate for \(\beta_1\) (1.3166493) is very close to the simulated value.

References

Session information

## R version 4.1.1 (2021-08-10)

## Platform: x86_64-pc-linux-gnu (64-bit)

## Running under: Ubuntu 20.04.2 LTS

##

## Matrix products: default

## BLAS: /usr/lib/x86_64-linux-gnu/blas/libblas.so.3.9.0

## LAPACK: /usr/lib/x86_64-linux-gnu/lapack/liblapack.so.3.9.0

##

## locale:

## [1] LC_CTYPE=es_ES.UTF-8 LC_NUMERIC=C

## [3] LC_TIME=es_CR.UTF-8 LC_COLLATE=es_ES.UTF-8

## [5] LC_MONETARY=es_CR.UTF-8 LC_MESSAGES=es_ES.UTF-8

## [7] LC_PAPER=es_CR.UTF-8 LC_NAME=C

## [9] LC_ADDRESS=C LC_TELEPHONE=C

## [11] LC_MEASUREMENT=es_CR.UTF-8 LC_IDENTIFICATION=C

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] sjPlot_2.8.10 lmerTest_3.1-3 lme4_1.1-27.1 Matrix_1.3-4

## [5] viridis_0.6.2 viridisLite_0.4.0 ggplot2_3.3.5 knitr_1.37

## [9] kableExtra_1.3.4

##

## loaded via a namespace (and not attached):

## [1] nlme_3.1-152 insight_0.17.1 webshot_0.5.2

## [4] httr_1.4.2 numDeriv_2016.8-1.1 tools_4.1.1

## [7] backports_1.2.1 bslib_0.2.5.1 utf8_1.2.2

## [10] R6_2.5.1 sjlabelled_1.2.0 DBI_1.1.1

## [13] mgcv_1.8-36 colorspace_2.0-3 withr_2.5.0

## [16] tidyselect_1.1.1 gridExtra_2.3 emmeans_1.7.4-1

## [19] curl_4.3.2 compiler_4.1.1 performance_0.9.0

## [22] cli_3.2.0 rvest_1.0.1 formatR_1.12

## [25] xml2_1.3.2 labeling_0.4.2 bayestestR_0.12.1

## [28] sass_0.4.0 scales_1.1.1 mvtnorm_1.1-2

## [31] systemfonts_1.0.2 stringr_1.4.0 digest_0.6.29

## [34] foreign_0.8-81 minqa_1.2.4 rmarkdown_2.10

## [37] svglite_2.0.0 rio_0.5.27 pkgconfig_2.0.3

## [40] htmltools_0.5.2 fastmap_1.1.0 highr_0.9

## [43] readxl_1.3.1 rlang_1.0.2 rstudioapi_0.13

## [46] jquerylib_0.1.4 generics_0.1.0 farver_2.1.0

## [49] jsonlite_1.7.2 zip_2.2.0 dplyr_1.0.7

## [52] car_3.0-11 magrittr_2.0.2 parameters_0.14.0

## [55] Rcpp_1.0.8 munsell_0.5.0 fansi_1.0.2

## [58] abind_1.4-5 lifecycle_1.0.1 stringi_1.7.6

## [61] yaml_2.3.5 carData_3.0-4 MASS_7.3-54

## [64] grid_4.1.1 sjmisc_2.8.9 forcats_0.5.1

## [67] crayon_1.5.0 lattice_0.20-44 ggeffects_1.1.2

## [70] haven_2.4.3 splines_4.1.1 sjstats_0.18.1

## [73] hms_1.1.0 klippy_0.0.0.9500 pillar_1.7.0

## [76] boot_1.3-28 estimability_1.3 effectsize_0.4.5

## [79] glue_1.6.2 evaluate_0.15 data.table_1.14.0

## [82] modelr_0.1.8 vctrs_0.3.8 nloptr_1.2.2.2

## [85] cellranger_1.1.0 gtable_0.3.0 purrr_0.3.4

## [88] tidyr_1.1.3 assertthat_0.2.1 datawizard_0.4.1

## [91] openxlsx_4.2.4 xfun_0.30 xtable_1.8-4

## [94] broom_0.7.9 coda_0.19-4 tibble_3.1.6

## [97] ellipsis_0.3.2